-

Rumors from TSMC

Apple may be getting 100% of TSMC's 3nm chip output for up to 12 months. No other company will get any significant share (except test batches and very custom low-volume chips).

For Apple, 3nm is now absolutely required.

-

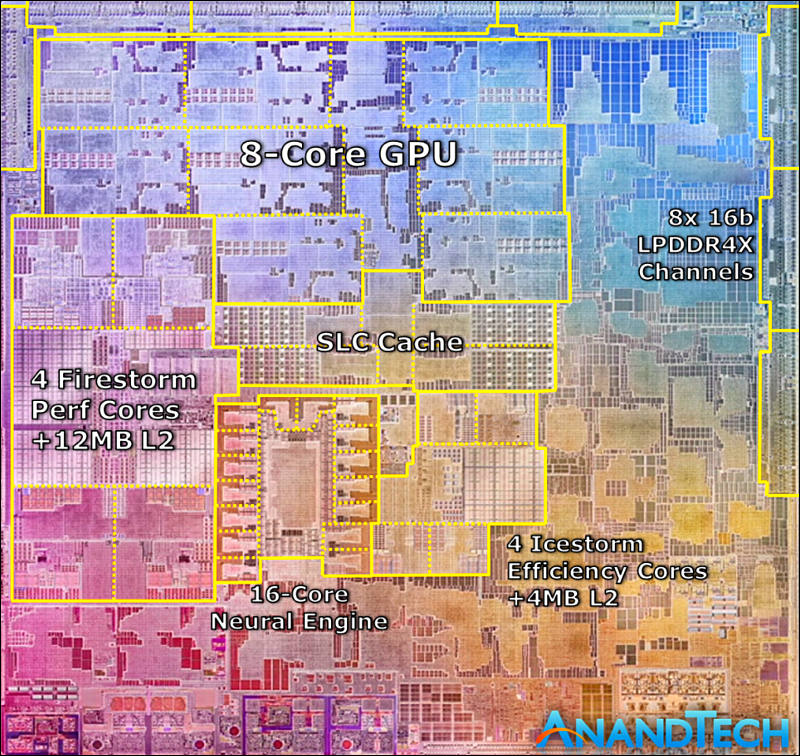

M1 CPU structure and the RAM on the M1 package

Note that the M1 not only uses much faster RAM chips, but also has a double-width bus.

So compared to the dual-channel DDR4L-2400 of a typical Windows notebook, it has about 3.5x more throughput.

That is a very big difference.
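To make the arithmetic behind the 3.5x figure explicit, here is a minimal sketch of the usual peak-bandwidth formula (transfers per second times bytes per transfer). The 4266 MT/s rate for the M1 memory and the 128-bit versus 64-bit bus widths are assumptions chosen to mirror the "faster chips plus double-width bus" claim above; they are inputs to the sketch, not measurements.

```python
def peak_bandwidth_gb_s(transfers_mt_s: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s for a given transfer rate and bus width."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_mt_s * 1e6 * bytes_per_transfer / 1e9


def throughput_ratio(fast: tuple, slow: tuple) -> float:
    """Ratio of two (MT/s, bus width in bits) configurations."""
    return peak_bandwidth_gb_s(*fast) / peak_bandwidth_gb_s(*slow)


# Assumed inputs: 4266 MT/s on a bus twice as wide as the 2400 MT/s baseline.
claimed = throughput_ratio((4266, 128), (2400, 64))

# Same inputs, but with equal bus widths on both sides.
same_width = throughput_ratio((4266, 128), (2400, 128))

print(f"double-width assumption: {claimed:.2f}x")
print(f"equal-width assumption:  {same_width:.2f}x")
```

The result scales directly with the width inputs, so the 3.5x number only holds if the bus really is twice as wide as the notebook it is compared against. With equal widths, only the roughly 1.78x speed difference of the chips remains.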

-

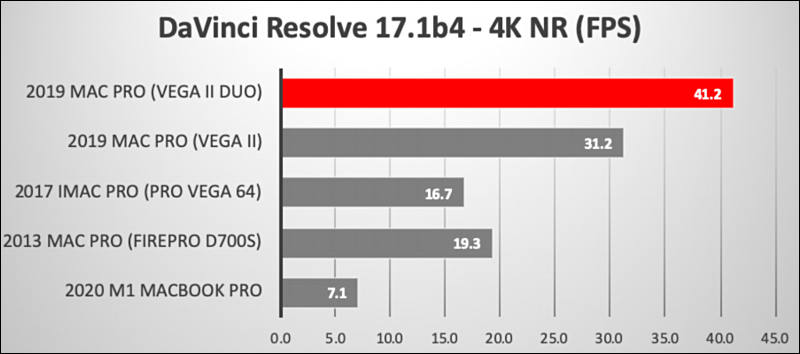

The Resolve test is really bad, as it has no relation to real projects and real work.

The Puget guys do even worse things. If they want to prove that you need 2 GPUs and a 32-core CPU, they just stack 20 abnormally chosen nodes, like 5 blurs and 3 noise reductions one after another, and test it all on the least compressed Sony 8K raw files (to make it much less optimized than RED). All this while the project lacks any cuts, normal real-life color grading and so on.

Because if they made it a real-life project in 4K or 1080p, with the usual handful of nodes, more tracks, mostly simple cuts, and H.264 or HEVC export, it would be bad for selling top-end hardware.

-

A test on DaVinci Resolve. I would like to see a test of a MacBook Pro with an eGPU.

https://barefeats.com/m1-macbook-pro-vs-other-macs-davinci.html

-

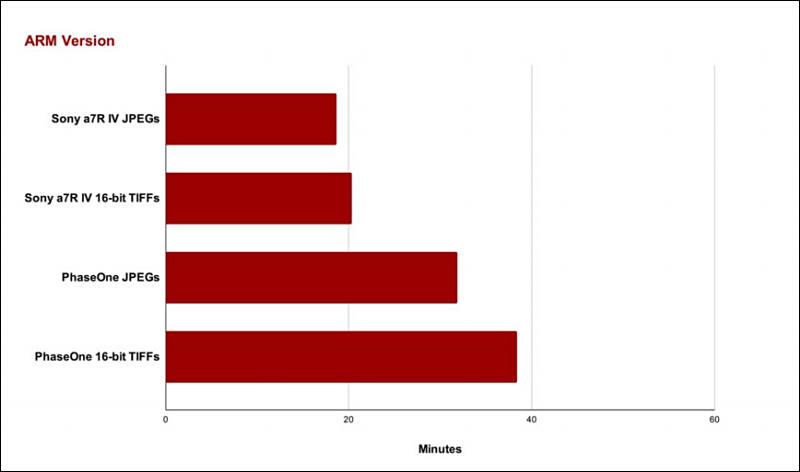

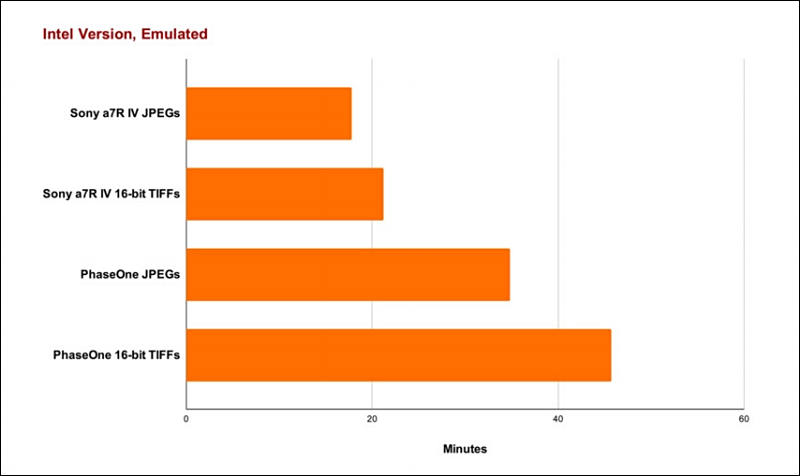

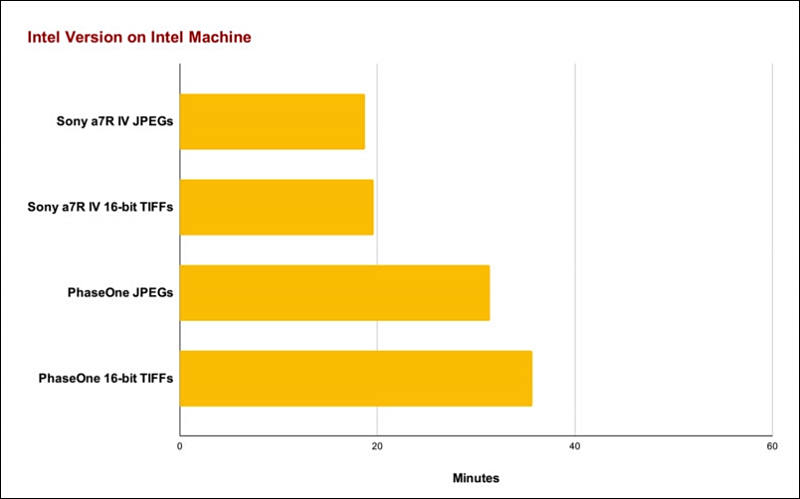

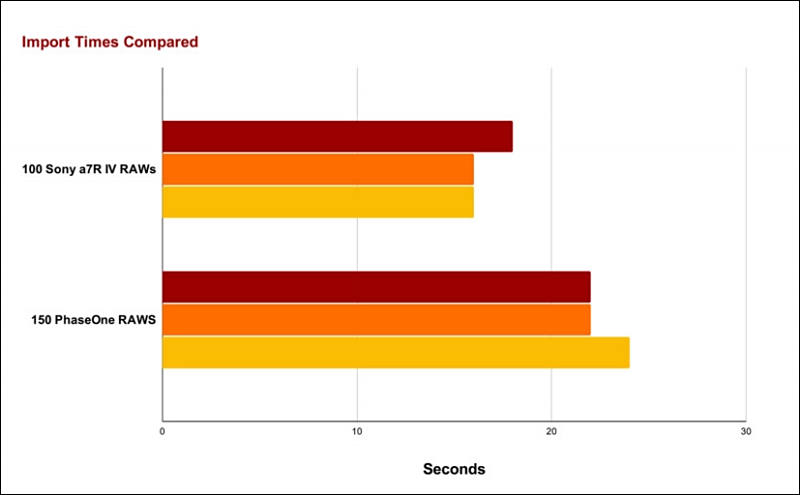

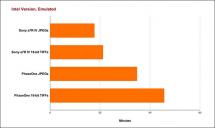

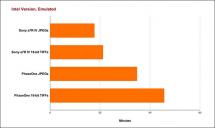

More down to earth results - Adobe Lightroom

https://petapixel.com/2020/12/08/benchmarking-performance-lightroom-on-m1-vs-rosetta-2-vs-intel/

This is interesting, as it is an example of an app that does not use fake GPU help during CPU operations (which adds a lot of "performance" in other benchmarks) and does not use the newer video import/export engines. And the results are not good: it seems that the M1 CPU by itself, without big help from manual optimization, does not add a lot, contrary to Apple's claims.

-

This is what all the talk is about

Apple Inc. is planning a series of new Mac processors for introduction as early as 2021 that are aimed at outperforming Intel Corp.’s fastest.

Chip engineers at the Cupertino, California-based technology giant are working on several successors to the M1 custom chip, Apple’s first Mac main processor that debuted in November. If they live up to expectations, they will significantly outpace the performance of the latest machines running Intel chips, according to people familiar with the matter who asked not to be named because the plans aren’t yet public.

Apple’s M1 chip was unveiled in a new entry-level MacBook Pro laptop, a refreshed Mac mini desktop and across the MacBook Air range. The company’s next series of chips, planned for release as early as the spring and later in the fall, are destined to be placed across upgraded versions of the MacBook Pro, both entry-level and high-end iMac desktops, and later a new Mac Pro workstation.

The road map indicates Apple’s confidence that it can differentiate its products on the strength of its own engineering and is taking decisive steps to design Intel components out of its devices. The next two lines of Apple chips are also planned to be more ambitious than some industry watchers expected for next year. The company said it expects to finish the transition away from Intel and to its own silicon in 2022.

The current M1 chip inherits a mobile-centric design built around four high-performance processing cores to accelerate tasks like video editing and four power-saving cores that can handle less intensive jobs like web browsing. For its next generation chip targeting MacBook Pro and iMac models, Apple is working on designs with as many as 16 power cores and four efficiency cores.

Apple could choose to first release variations with only eight or 12 of the high-performance cores enabled depending on production. Chipmakers are often forced to offer some models with lower specifications than they originally intended because of problems that emerge during fabrication.

For higher-end desktop computers, planned for later in 2021 and a new half-sized Mac Pro planned to launch by 2022, Apple is testing a chip design with as many as 32 high-performance cores.

Apple engineers are also developing more ambitious graphics processors. Today’s M1 processors are offered with a custom Apple graphics engine that comes in either 7- or 8-core variations. For its future high-end laptops and mid-range desktops, Apple is testing 16-core and 32-core graphics parts.

For later in 2021 or potentially 2022, Apple is working on pricier graphics upgrades with 64 and 128 dedicated cores aimed at its highest-end machines, the people said. Those graphics chips would be several times faster than the current graphics modules Apple uses from Nvidia and AMD in its Intel-powered hardware.

An interesting Bloomberg article, mostly written by the Apple PR team; they have been doing this regularly for the last 2 years.

Apple feels the bad vibes from big corporations and clients, and they need to quiet this down while their teams urgently work on a solution.

But here comes the hefty price of the approach Apple is now taking: any small error or issue at TSMC and it'll be a disaster.

Even this year, if not for the extreme attack on Huawei, Apple would not have been able to get any volume of M1 chips. In reality, 100% of the M1 production lines and 35% of the iPhone 12 chip lines are lines that Huawei paid for and reserved at TSMC.

-

@radikalfilm Not to hijack this thread but take a look at this project:

https://www.cubbit.io/one-time-payment-cloud-storage

https://www.hackster.io/news/take-advantage-of-distributed-cloud-storage-with-cubbit-357b4498ade4

-

Talked to an engineer who worked in Taiwan, including on the design of older MB motherboards.

He said that Apple made its biggest and most staggering error. Tactically it can look like a small win, but in the long term Apple will be required to keep two fully separate lineups: notebooks, where progress will stop fast just due to issues with smaller processes, and desktops, where Apple will need fully parallel x86 software.

He is afraid that the present Apple managers want to abandon the whole pro desktop market, as Apple has absolutely no ability to make and update multiple top desktop-class CPUs (they are very costly to make and Apple holds only a tiny niche).

On the other hand, we know that Apple has quite a big engineering and developer team working on mobile Ryzen-based notebooks and desktops. We could see notebooks with 16-core H-class CPUs, from 45 to 90 watts, as soon as next year.

-

The fact remains, however that this is a nice step for blazing fast and intuitive non-linear-editing of video in extremely hi-res formats for $1,000 or less

Presstitutes and mass media already told you that around a thousand times, didn't they?

The things I am talking about are what matters most; a small performance gain is not.

And I have no idea why I would want to edit even 4K video on a small laptop unless I am in a hotel on some distant trip (and COVID has mostly taken care of that option).

-

"For example, both Microsoft and Apple are hiring the biggest number of people into teams that develop systems to dig through your files in their clouds (the nice term for this is text- and vision-based AI). Analyzing stuff, figuring out what is risky for the company where you work or for the ruling class in general, reporting all real progress to your boss (the company will have a special rate to buy info about you). It will also check all video and photo files; all names of people (via faces) and content will be collected indefinitely, reported and sold.

Another thing I hear is that both companies already have AI that can check all major file formats and reject any encrypted files or archives. The idea is to finally get into bureaucratic dreamland, where files are encrypted but they know the key (both Microsoft and Apple clouds already work this way; both lack any private encryption).

Both clouds also have very interesting new strategies. For example, I already heard that a bank insisted that a medium-sized (around 150 people) US company move into a specific cloud in order to get a new round of credit."

@Vitaliy_Kiselev those are all valid societal concerns, I suppose, with regards to surveillance taking over our lives and tech monopolies and what-not. The fact remains, however that this is a nice step for blazing fast and intuitive non-linear-editing of video in extremely hi-res formats for $1,000 or less, which seems like a step in the right direction from a filmmaking perspective.

A kid with an iPhone and a MacBook is Coppola's proverbial 'fat kid in Ohio' example, now, and in theory great things can come of that. I suppose in practice, it'll be like the DSLR revolution: a few folks made features that might not have otherwise, mostly no one did shit except YouTube camera tests. Plus we can throw in your dystopian tech company controlled world to boot.

Still, though, haha...they're damn fast and cheap for Apple, and FCPX is life changing in ways people still don't get.

-

Rumors are that Apple will throw up to USD 30-40 billion at sponsoring development of adapted software, including adding features to existing iPad apps.

Each company will sign an NDA and will be provided with multiple highly skilled consultants and developers; Apple will also open up and provide highly optimized private libraries to some of them.

An important part of the agreement will be full withdrawal from independent software distribution and use of the Apple store only.

If a company moves to a monthly subscription, it will get a big real-money bonus from Apple (as Apple will be getting a 30% cut each month, this is VERY beneficial to Apple).

-

Oh oh dark cpu. Come on. Let's use the power of imagination! M2 will beat the sh!t outta M1!

-

Make sure to provide some summary with links; do not post a link alone.

One important point the author missed is that RISC architectures have always had their bright moments and after that faded again into oblivion (and the mobile space).

For a powerful stationary computer it is ALWAYS preferable to have a CISC architecture.

The reason is that with the same processor cache and the same memory throughput you will always get more done on a CISC machine (as the overall size of the instructions is smaller). In exchange you essentially have a translator inside the CPU, so it is less energy efficient. The x86 lead also originates from the very slow progress of memory speed.

Note how Apple uses the fastest widely available DDR4L memory (memory in most Windows laptops is much slower, 1.77x slower; even for top gaming and performance notebooks the difference is 30%). If they had used cheap 2400MHz chips, M1 performance would have been much worse. The hit would be much larger than for x86 chips.

Apple also seems to have quite a big cache that is shared between CPU and GPU (benchmarks properly optimized by Apple will hide this). Having exclusive access to the 5nm process, they could do it.

Apple also specially optimizes both software and all leading benchmarks to keep as much as possible inside the cache, and to have code as small and efficient as possible where it matters. They have huge money for this; rumors are that the total M1 development cost is less than 10% of the money they spent on optimizing and adapting software and paying third-party developers.

This is where we finally see the revenge of RISC, and where the fact that the M1 Firestorm core has an ARM RISC architecture begins to matter.

You see, for x86 an instruction can be anywhere from 1–15 bytes long. On a RISC chip instructions are fixed size. Why is that relevant in this case?

Because splitting up a stream of bytes into instructions to feed into 8 different decoders in parallel becomes trivial if every instruction has the same length.

However on an x86 CPU the decoders have no clue where the next instruction starts. It has to actually analyze each instruction in order to see how long it is.

The brute force way Intel and AMD deal with this is by simply attempting to decode instructions at every possible starting points. That means we have to deal with lots of wrong guesses and mistakes which has to be discarded. This creates such a convoluted and complicated decoder stage, that it is really hard to add more decoders. But for Apple it is trivial in comparison to keep adding more.

In fact adding more causes so many other problems that 4 decoders according to AMD itself is basically an upper limit for how far they can go.

This is what allows the M1 Firestorm cores to essentially process twice as many instructions as AMD and Intel CPUs at the same clock frequency.
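The boundary-finding argument in the quoted article can be illustrated with a small sketch. It is not a model of any real decoder: `toy_length` is a hypothetical encoding made up for this example (it is not x86), and the function names are assumptions. The only point is that fixed-width start offsets can be computed independently of each other, while variable-width start offsets each depend on the length of the previous instruction.

```python
from typing import Callable, List


def fixed_width_starts(code: bytes, width: int = 4) -> List[int]:
    """Start offsets are known up front: 0, width, 2*width, ...

    Each offset can be computed without looking at any other instruction,
    so several decoders could be pointed at them in parallel.
    """
    usable = len(code) - len(code) % width
    return list(range(0, usable, width))


def variable_width_starts(
    code: bytes, length_of: Callable[[bytes, int], int]
) -> List[int]:
    """Start offsets must be discovered one after another.

    The position of instruction N+1 is unknown until the length of
    instruction N has been determined.
    """
    starts = []
    pos = 0
    while pos < len(code):
        starts.append(pos)
        pos += length_of(code, pos)
    return starts


def toy_length(code: bytes, pos: int) -> int:
    """Hypothetical toy encoding: low 2 bits of the first byte give 1..4 bytes."""
    return (code[pos] & 0b11) + 1


fixed = fixed_width_starts(bytes(16))
variable = variable_width_starts(
    bytes([0x02, 0xAA, 0xBB, 0x00, 0x01, 0xCC, 0x03, 1, 2, 3]), toy_length
)
print(fixed)
print(variable)
```

The sketch says nothing about how much this costs in silicon or how well real hardware hides it; it only shows where the sequential dependency comes from.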

This part is a mangled mess. First he correctly says that ARM instructions are usually shorter, but the thing is that to do the same real operations, the total number of instructions and their total length will be MORE. Btw, ARM instructions are generally not fixed length if you take into account all the extras, DSP and so on. ARM is also very complex.

Complex decoders are not an unsolvable problem. And note that x86 CPUs are also very smart and complex; for example, a CPU can cache the instruction lengths for code it recently saw.
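The idea of remembering instruction lengths for recently seen code can be sketched as a simple lookup table in front of the slow length decoder. This is only an illustration of the caching principle under stated assumptions: `toy_length` is a hypothetical encoding (not x86), `LengthCache` and `run_loop` are invented names, and real hardware also has to handle eviction and code that changes in memory, which this sketch ignores.

```python
from typing import Callable, Dict


def toy_length(code: bytes, pos: int) -> int:
    """Hypothetical toy encoding: low 2 bits of the first byte give 1..4 bytes."""
    return (code[pos] & 0b11) + 1


class LengthCache:
    """Remembers the length found at each offset so it is decoded only once."""

    def __init__(self, length_of: Callable[[bytes, int], int]) -> None:
        self._length_of = length_of
        self._lengths: Dict[int, int] = {}
        self.slow_decodes = 0  # how often the full length decode had to run

    def length_at(self, code: bytes, pos: int) -> int:
        if pos not in self._lengths:
            self.slow_decodes += 1
            self._lengths[pos] = self._length_of(code, pos)
        return self._lengths[pos]


def run_loop(cache: LengthCache, code: bytes, iterations: int) -> int:
    """Walk the same code repeatedly, as a hot loop would, and count slow decodes."""
    for _ in range(iterations):
        pos = 0
        while pos < len(code):
            pos += cache.length_at(code, pos)
    return cache.slow_decodes


loop_body = bytes([0x02, 0xAA, 0xBB, 0x00, 0x01, 0xCC, 0x03, 1, 2, 3])
slow = run_loop(LengthCache(toy_length), loop_body, iterations=100)
print(slow)
```

In this toy setup the expensive length decode runs once per instruction no matter how many times the loop body is executed, which is the effect the post is pointing at for hot code.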

We had a similar period in the 90s and early 2000s (remember PowerPC and such), when all the presstitutes published countless articles on how x86 was dead and how RISC was the total future for everything. But time proved them wrong.

The guy also does not understand that M1 is made using the most expensive and most advanced tech available, tech that the US is even willing to fight for with military force and that they openly prohibited Huawei from using. It must also be noted that the Apple M1 is made on the low-power version of TSMC 5nm, so it does not scale at all. M1 is operating at the absolute top margin of transistor stability, unlike the x86 products that will follow.

As soon as Apple tries to move to the high-performance version of 5nm (it does not exist in mass production for now), the energy efficiency at the same performance will instantly drop by 30-45%.

-

My point is that I am sure you propose superb products.

But I am playing the Devil's Advocate here, going a little extreme in my responses, though still within reason.

-

@Vitaliy_Kiselev what was your point again? I fully lost track. I think it was "MacOS is bad because ARM Mac hardware is now too good" or something. So don't use the Mac because you get used to the good and then they get you. No deviance or solution is permitted, because it's your personal view :) The only reason I poke the bear is because it reminds me that intelligence and reasonableness are at odds with each other.

-

NextCloud isn't just remote data storage. That's like saying a house is its foundation. It has email, Office suite, (video) conferencing, text chat, everything the Google cloud or Office365 offers to users.

I don't need any of that.

The point of a cloud is to access computing services remotely, not remote data backup. Remote data backup has existed for 20 years, way before anyone's cloud.

Clouds started as a way to store data reliably, and no, remote backup that distributed data among centers located all around the world, with complex algorithms for redundancy and so on, was not on the market 20 years ago.

Soon after that, it became a way for corporations to reduce infrastructure expenses, hence the provision of instances.

All the rest followed as a third step, as a way to increase income from already existing infrastructure.

I suggest QNAP's HybridCloud, a free service on the QNAP OS. You keep the data on a QNAP NAS on your premises/home, and access it from anywhere (including mobile) through an account at qnapcloud.com. There is some setup involved, but doesn't require massive IT skills. It's analogous with Microsoft and Google Drive, a live filesystem you can mount.

And one fine day QNAP will be bought, within months your remote access will cease to work, and within a year all OS patching and further development will stop. Thanks.

Btw, the same is true for all but a few top Linux and BSD distributions.

-

@Vitaliy_Kiselev NextCloud isn't just remote data storage. That's like saying a house is its foundation. It has email, Office suite, (video) conferencing, text chat, everything the Google cloud or Office365 offers to users.

The point of a cloud is to access computing services remotely, not remote data backup. Remote data backup has existed for 20 years, way before anyone's cloud.

For in-between requirements (remote data access, without the computing) I suggest QNAP's HybridCloud, a free service on the QNAP OS. You keep the data on a QNAP NAS on your premises/home, and access it from anywhere (including mobile) through an account at qnapcloud.com. There is some setup involved, but doesn't require massive IT skills. It's analogous with Microsoft and Google Drive, a live filesystem you can mount.

"And still any of these guys can index your data, sell it for NN training and do anything they want." You exchange money with them on the promise they won't do any of that. The only way to make sure is to run your own cloud. OTOH these are small guys, your typical web hosting provider. They don't have the scale to mine data and turn a profit from it even if they wanted to. Also if such data sale is discovered, they destroy their business model in 3 seconds, and as a client you can sue them.

-

I don't need my own cloud, as much simpler solutions exist for backup and sync of files on a single server. And if I have my own server, the simpler the solution, the safer my data. The whole point of a cloud is the ability to store stuff across a large number of servers in a distributed way.

Not free service in exchange for your data privacy. Authorities can still get your data with an injunction, but at least your data isn't mined and tracked.

And still any of these guys can index your data, sell it for NN training and do anything they want.

-

But you gotta cache desired data from your cloud to your machine to consume it.

-

@Vitaliy_Kiselev then you're not understanding what NextCloud provides. Conditional on one's IT proficiency, you have the option to run your own and have the same functionality as Google's cloud, under your own domain. It's work, but you have full control over the data. This is one extreme. It can be hooked into the /e/ OS (the de-googled Android) instead of the Google account.

If you just want to escape iCloud or Google Cloud data mining, then select one of the SaaS providers from here https://nextcloud.com/signup/ , which provide service in exchange for money, not free service in exchange for your data privacy. Authorities can still get your data with an injunction, but at least your data isn't mined and tracked.

-

I see no reason to run a cloud on my own server (as it defeats the purpose of a cloud).

In all other cases it is the owners of the hardware and the firm who can see all your stuff.

-

NextCloud is open source. If one is determined enough one can build it from source and run it on their own servers, with Elbrus CPUs. Good enough for you?

-

No, NextCloud is not a solution, same as any other more or less large cloud, as they all have the same core investors (banks and investment funds).

And, as I said, a computer is not a knife; you can't use it like one. It will kick back and will win in the end for 99.9% of users, as it takes a lot of knowledge and hassle just to keep the level we had 2-3 years ago.
