I call BS on the "Cadence Problem"

-

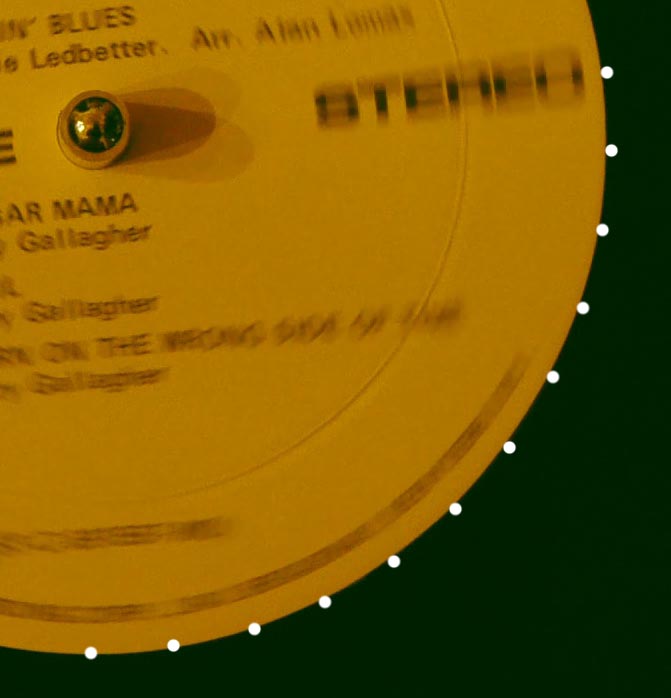

I ran a little test to check the GH2 cadence at 24p. I captured a record spinning at 33 RPM on a turntable. The picture shows one frame - I added marker dots from the next 11. As you can see, the dots are evenly spaced and at the right angle. Not a super precise test, but good enough.
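For reference, here's the arithmetic for where the dots should land. It's a sketch that assumes the record turns at the nominal LP speed of 33 1/3 RPM and that the GH2's "24p" really runs at 24000/1001 fps. The post doesn't state either number exactly.

```python
from fractions import Fraction

# Assumption: nominal LP speed of 33 1/3 RPM (the post just says "33 RPM")
RPM = Fraction(100, 3)
# Assumption: GH2 "24p" is the NTSC-family rate 24000/1001 (~23.976 fps)
FPS = Fraction(24000, 1001)


def degrees_per_frame(rpm: Fraction, fps: Fraction) -> Fraction:
    """Rotation of the platter between two consecutive frames, in degrees."""
    revs_per_second = rpm / 60
    return revs_per_second * 360 / fps


if __name__ == "__main__":
    step = degrees_per_frame(RPM, FPS)
    print(f"{float(step):.4f} degrees per frame")
    print(f"{float(step * 11):.2f} degrees spanned by the 11 extra dots")
```

Using exact fractions keeps the 24 vs 23.976 difference from being lost to rounding. That difference is only about 0.008 degrees per frame, well below what you'd eyeball from marker dots.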

Chris -

U DA MAN!!!

-

@cbrandin

I think this is a good idea! I wonder if there is a way to know the precision of your measurement?

Do you think rolling shutter has an impact on precision?

I use a strobe to tune my guitar and it is extremely accurate. Your method of judging cadence seems to use a somewhat similar principle.

It might be possible to judge GH2 cadence with more precision using a digital metronome - one that flashes at consistent rates (1/24, 1/48, 1/30, 1/60, etc.). I would hesitate to use a mechanical metronome because of rolling shutter.

[Yes. FWIW, rather than relative picture quality of 32/42MB, I mostly noticed the curving metronome bar in those pictures mpgxsvcd posted in the "Low GOP truthfulness" thread.]

-

I'm a little slow. What is this supposed to be indicating? Is this a frame grab from the HDMI output or something?

-

@aria

People have speculated that there is an unevenness in the rate at which the GH2 captures video, creating odd judders and stutters. This was to prove that the GH2 capture rate (cadence) is correct and constant.

@v10tdi

A digital metronome just flashes, so it won't work. You need something in motion. Rolling shutter has nothing to do with cadence. Why bother with more precise tests? You'll never see a difference if you can't actually see the difference.

The problem is that there are several factors that affect what motion looks like. To get anywhere you have to figure out how to test each element separately. If you believe, for example, that cadence is off and are wrong, you and everybody who believes you will be wasting time speculating about something that isn't true.

Chris -

Chris,

Thank you for posting this. I read your post several days ago regarding this test and thought it was an excellent idea.

As I mentioned earlier, this topic seems to come up with every damn video camera that hits the market.

thanks again! -

What was the shutter speed of the GH2? I notice odd judders, but only at higher shutter speeds, like 1/500. I am sure shutter speed has a bearing on this somehow, with some speeds affecting the output and some not.

-

Do you have a turntable? This is not a difficult test. Just make sure you set your editor to native 23.xxx mode. Shoot a spinning record and look at the rotation at each frame.
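Once you have the marker angle from each frame, checking cadence is just a matter of differencing consecutive readings. This is a minimal sketch. It assumes you've measured the angles yourself, in degrees, in the direction of rotation. The function names and the readings in the example are hypothetical, not measured data.

```python
def frame_steps(angles_deg):
    """Angle advanced between consecutive frames, handling the 360 -> 0 rollover."""
    return [(b - a) % 360 for a, b in zip(angles_deg, angles_deg[1:])]


def max_cadence_error(angles_deg, expected_step):
    """Largest deviation of any frame-to-frame step from the expected step."""
    return max(abs(s - expected_step) for s in frame_steps(angles_deg))


if __name__ == "__main__":
    # Hypothetical readings, not real measurements; the last step is doubled
    readings = [350.0, 358.3, 6.7, 15.0, 31.7]
    print([round(s, 1) for s in frame_steps(readings)])
    print(round(max_cadence_error(readings, 8.34), 2))
```

With correct cadence every step sits near the expected value (about 8.34 degrees for 33 1/3 RPM at 23.976 fps). A step near twice that suggests a dropped frame, and a step near zero suggests a repeated one.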

High shutter speeds might cause a judder of another kind, just not an actual cadence problem. With high shutter speeds you get much more detail, and the codec can run out of steam toward the lower part of the frame, especially with P and B frames. In that case the codec will simply skip encoding macroblocks because it has run out of bandwidth. What you'll get is whatever was in the last frame, or in some cases the last I frame, or mud in the case of I frames (in extreme cases).

Somebody posted a remarkable video a while back showing this. It was a video of grass waving in the wind and water flowing in the lower part of the frame. The grass looked great, but the water stuttered along in 1/2-second increments. The water wasn't being properly encoded for a couple of reasons, but 90% of the reason was that it was in the lower part of the frame (the other reason was that it had much lower contrast than the grass). If you consider that one of the side effects of low GOP is to starve frame bandwidth, it is quite possible to get this effect unless you are capturing at extremely high bitrates. I don't think this can be tested with a turntable, though - not enough detail in the right places.
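To see why the bottom of the frame suffers first, here's a deliberately crude toy model. It is not the GH2's actual rate control, which adjusts quantization rather than hard-stopping. It encodes macroblocks in raster order (left to right, top to bottom) against a fixed per-frame bit budget and counts how many fit in each macroblock row. The uniform per-macroblock cost and the budget numbers are hypothetical.

```python
def coded_per_row(rows, cols, bits_per_mb, budget_bits):
    """Toy raster-order encoder: how many macroblocks per row fit in the budget.

    Macroblocks that don't fit are treated as skipped (i.e. they reuse
    whatever the reference frame had), which is the failure mode described
    above. Real encoders degrade more gradually than this.
    """
    remaining = budget_bits
    per_row = []
    for _ in range(rows):
        coded = 0
        for _ in range(cols):
            if bits_per_mb <= remaining:
                remaining -= bits_per_mb
                coded += 1
        per_row.append(coded)
    return per_row


if __name__ == "__main__":
    # 1920x1088 coded frame = 120 x 68 macroblocks of 16x16 pixels.
    # Hypothetical: every macroblock needs 2,000 bits, budget is 12 Mbit.
    rows = coded_per_row(68, 120, 2_000, 12_000_000)
    starved = sum(1 for n in rows if n < 120)
    print(f"{starved} of 68 macroblock rows are partly or fully skipped")
```

Because the budget is spent in scan order, every shortfall lands at the bottom of the frame. That's the same place the water was in the video.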

Chris -

I found the video from @bdegazio - look at the water just left of the log in the first part of the video (pretty much all around the logs, actually).

-

@Chris

Thanks for this cadence test. I just haven't been seeing the 'judder' problem like others except on certain shots and the so called 'judder' is exactly what I've seen with 35mm over the years when we (usually unintentionally) pan across something detailed while following NOTHING moving with the pan in frame. I saw three films in the theater this weekend and intentionally looked out for shots like this and there were only a few, but each time there was, I saw the "judder" - it's just inherent in the frame rate, has been for decades, that's why we try to avoid medium speed pans without following anything in frame. At high speed you just don't notice it (whip pan) and at slow speed you usually don't notice it. The first time I directed some second unit for a fairly well known director and did a medium speed pan of a city block while following nothing moving the director hit me in the back of the head during dailies and told me never to do it again because it strobed. Nature of the beast. -
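The arithmetic behind pan strobing is simple enough to sketch. During a pan, a static background jumps a fixed distance between frames. The shutter only smears part of that jump, because it's open for a fraction of the frame interval set by the shutter angle. The function names, the 1920-pixel width, and the example crossing time below are illustrative assumptions, not numbers from this thread.

```python
def jump_px(crossing_s, fps=24.0, width_px=1920):
    """Distance a static background moves between frames when it takes
    crossing_s seconds to travel from one frame edge to the other."""
    return width_px / (crossing_s * fps)


def shutter_angle(fps, shutter_s):
    """Equivalent shutter angle in degrees for a given exposure time."""
    return 360.0 * fps * shutter_s


def blur_px(jump, angle_deg=180.0):
    """Motion smear inside one frame: the part of the jump covered while the shutter is open."""
    return jump * angle_deg / 360.0


if __name__ == "__main__":
    j = jump_px(4.0)  # hypothetical 4-second edge-to-edge pan
    for exposure in (1 / 48, 1 / 500):
        angle = shutter_angle(24.0, exposure)
        print(f"1/{round(1 / exposure)} s: jump {j:.0f} px, blur {blur_px(j, angle):.1f} px")
```

With a 180-degree shutter the blur bridges half of each jump. At 1/500 the equivalent angle at 24 fps is only about 17 degrees, so the jumps show up as crisp, separate images. That's consistent with judders being more visible at higher shutter speeds. How big a jump reads as strobing also depends on subject detail, which this doesn't capture.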

@kae

Yup...

It looks like people are using different terms, or sometimes the same terms inappropriately, for the many motion artifacts that can happen. I can see why somebody would call what the video above shows "strobing" or "judder", because that's the closest thing they have seen to it. Digital capture produces motion artifacts unique to it, so it can be hard to identify exactly what is going on if you try to relate things to what film cameras do. The solution to the effect in the above video is simply to increase bitrate (it was shot with an unhacked GH2). With film, you would never see differences in how motion is reproduced within a single frame; in the digital world it can happen. This can be very confusing at first.

Chris -

I personally never thought the frame rate varied that much. I don't think the flickering has to do with evenness. I also only notice it when comparing against the GH13. The GH13 just seems a little smoother. I can test it with my superclock, but even if there is a slight variation I don't think it would account for the effect.

-

@v10tdi pointed out that a rolling shutter would skew this test (good call!). He's right, but I minimized that by making the measurements in a small portion of the center of the frame - the picture I posted is just a small portion of the actual frame. Even better would have been to center the samples across the bottom, but I don't think we need that much accuracy.
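A toy model shows why sampling a small patch is enough. It assumes a simple top-to-bottom rolling shutter where each row is read a fixed fraction of the readout time after the first. A feature that stays on the same row sees perfectly regular frame intervals. The only error comes from how far the measured points spread vertically. The 20 ms readout time is a hypothetical value, not a measured GH2 figure.

```python
def row_time(frame, row, fps, readout_s, rows):
    """Toy rolling shutter: the moment a given sensor row of a given frame is read."""
    return frame / fps + (row / rows) * readout_s


def intervals_for_row(row, n_frames, fps, readout_s, rows):
    """Frame-to-frame time steps seen by a feature that stays on one sensor row."""
    t = [row_time(f, row, fps, readout_s, rows) for f in range(n_frames)]
    return [b - a for a, b in zip(t, t[1:])]


def patch_time_spread(patch_rows, readout_s, rows):
    """Worst-case timing mismatch between the top and bottom of a measurement patch."""
    return readout_s * patch_rows / rows


if __name__ == "__main__":
    fps = 24000 / 1001
    readout = 0.020  # hypothetical readout time, seconds
    print(intervals_for_row(1000, 4, fps, readout, 1080))  # all ~1/fps
    spread = patch_time_spread(54, readout, 1080)  # patch 5% of frame height
    print(f"{spread * 1000:.1f} ms -> {spread * 200:.2f} deg at 200 deg/s")
```

Under these assumptions, a patch 5% of the frame tall puts at most about 1 ms of skew between dots. At 33 1/3 RPM (200 degrees per second) that's roughly 0.2 degrees, small next to the ~8.3-degree step between frames.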

Chris -

@chris

"...and the codec can run out of steam toward the lower part of the frame - especially with P and B frames. In that case the codec will simply skip encoding macroblocks because it has run out of bandwidth"...

I understand what you are saying about running out of bandwidth in the lower frame, if the upper part has used most of the available bandwidth, but during the slow zoom towards the logs, where the upper grass detail moves out of frame, there is NO improvement in the details of the running water, the log or the remaining grass. It's still blocky and jerky. The following shot is much better. Strange. Could there be something else going on to cause this? -

I know I'm probably not the norm when it comes to this stuff, but I just don't notice the strobing/judder/flickering/whatever stuff. I always notice details and framing first, then acting, lighting, location, etc.

-

A lot of things can be causing this. I admit that I simplified the explanation a little. AVC codecs use a number of strategies to determine how to encode macroblocks, including:

Using neighboring macroblocks as references - this is intra encoding. I frames use this, P and B frames can use this.

Using macroblocks in previous frames as references - P and B frames can do this.

Using macroblocks in later frames as references - B frames can do this.

Using macroblocks in previous frames that are shifted in various directions (motion vectors) as references - P and B frames can do this.

Using macroblocks in later frames that are shifted in various directions (motion vectors) as references - B frames can do this.

Skip encoding the macroblock and just use motion vectors based on previous frames - P and B frames can do this.

Skip encoding the macroblock and just use motion vectors based on later frames - B frames can do this.

Code macroblocks using lower or no detail (macroblocking, or mud) - all frames can do this.

Don't do anything with a macroblock - P and B frames can do this.

The codec works by estimating how much bandwidth it can use at any time and by trying to predict how macroblocks should be encoded. Also a quality comparison test is used. The codec will try to determine the lowest bandwidth demand from the techniques listed above by trying them all and settling on the one that meets quality criteria with the least number of bits. If the quality metric can be met without using all available bandwidth the frames simply come out smaller and the bitrate goes down. If the opposite happens, however, the codec will begin making sacrifices in quality in order not to exceed the allotted bitrate. The result is that the quality can vary in different portions of a single frame, usually meaning that the upper part of the frame is better quality than the lower. It's all very complicated so there isn't one clearly identifiable artifact. For example, motion vectors don't consume much space. If the bitrate reduction strategy that yields the best result is skipped macroblock motion vectors, rather than low-detail encoding, the codec will use that. Both can look bad, but in different ways. In a frame where these compromises have been made you might see the effects of both in different parts of the frame.
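The selection rule described above can be sketched as follows. Among the options that fit the available bits, take the cheapest one that meets the quality target. If none does, take the best-looking option that still fits. The mode labels, bit costs, and quality numbers are hypothetical. Also note that many real AVC encoders (x264, for example) use a Lagrangian rate-distortion cost instead of a hard quality threshold, and nothing here is taken from the GH2 firmware.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    mode: str       # illustrative label, e.g. "intra", "inter", "skip"
    bits: int       # bits this choice would cost
    quality: float  # higher is better; stand-in for a PSNR-like metric


def choose_mode(candidates, quality_target, bits_available):
    """Pick a macroblock encoding per the threshold rule described above."""
    affordable = [c for c in candidates if c.bits <= bits_available]
    if not affordable:
        # Nothing fits: fall back to the cheapest option (typically a skip)
        return min(candidates, key=lambda c: c.bits)
    good_enough = [c for c in affordable if c.quality >= quality_target]
    if good_enough:
        # Quality met: spend as few bits as possible
        return min(good_enough, key=lambda c: c.bits)
    # Budget-limited: sacrifice quality but stay within the bits available
    return max(affordable, key=lambda c: c.quality)


if __name__ == "__main__":
    options = [
        Candidate("intra", 900, 42.0),
        Candidate("inter", 300, 40.0),
        Candidate("skip", 8, 31.0),
    ]
    print(choose_mode(options, 38.0, 1000).mode)  # plenty of bits
    print(choose_mode(options, 38.0, 100).mode)   # starved
```

The second call shows the "sacrifice quality to stay within bitrate" path. When bits run short, the cheap skip wins even though it misses the quality target, and that's where the stuttering-but-sharp look can come from.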

Chris

-

@JanH

That slow zoom you refer to was done in post in order to emphasize the problem. I noted that in my original post of the file. The original shot was a lockoff. I can post the original if anyone is interested.

-

That would be nice. Your video is one of the best examples that shows the various things that can happen when the codec fails.

Chris -

@Kae -- what range would be considered a medium speed pan (i.e. seconds for a stationary subject to move from one edge of the screen to another). Had no idea about this rule but very helpful -- thanks for sharing it!!!

-

@Cbrandin

Would you rather have a P frame or I frame if both are the same size? A lot of the videos I have taken that have waving grass and water like the one shown above have P frames and I frames that are the same size. I was wondering if that is a good thing or a bad thing? Would it be better to reduce the GOP value and eliminate the P frames if the I frames stay the same size? -

@chris

Thanks for the detailed explanation. It's amazing (but obvious) that examination of the images, in conjunction with how the AVCHD codec is defined / implemented, will lead you to the parameters that need to be tweaked to get improved IQ. Terrific work by the whole "GH2 improvement" team..

I also didn't appreciate, until @bdegazio indicated, that it was a frame crop I was looking at. My bad... -

@cbrandin "The codec will try to determine the lowest bandwidth demand from the techniques listed above by trying them all and settling on the one that meets quality criteria with the least number of bits."

Thanks for the succinct overview of macroblock encoding strategies. I'm curious how the encoder balances compression quality against its physical constraints: limited video buffer size along with rigid real-time encoding deadlines.

In order to use backward prediction in B-frames, the next I or P-frame must be encoded in advance. This requires the encoder to temporarily buffer unencoded video frame data for each consecutive sequence of B-frames, in addition to maintaining video buffers for two encoded I and/or P-frames. With the standard IBBPBB frame-sequence, the encoder must continuously juggle the video data from four consecutive frames at the same time. As bitrate is increased, the worst-case buffer size required for encoded I and P-frames will increase as well.
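Here's a sketch of the reordering this forces. The camera captures frames in display order, but each I or P anchor must be coded before the B frames that precede it, so those B frames are held uncompressed until their anchor is done. The function names are hypothetical. As a simplification, trailing B frames that would reference the next GOP's anchor are just appended at the end.

```python
def coding_order(gop):
    """Reorder a display-order GOP string (e.g. 'IBBPBBPBB') into coding order.

    Each I/P anchor is emitted before the B frames waiting on it.
    Trailing B frames are appended at the end (simplified: in a real open
    GOP they would wait for the next GOP's I frame).
    """
    order, pending_b = [], []
    for i, frame_type in enumerate(gop):
        if frame_type == "B":
            pending_b.append(i)  # must stay buffered, uncompressed
        else:
            order.append(i)
            order.extend(pending_b)
            pending_b = []
    order.extend(pending_b)
    return [f"{gop[i]}{i}" for i in order]


def max_held_b_frames(gop):
    """Longest run of B frames the encoder must hold before their anchor arrives."""
    longest = run = 0
    for frame_type in gop:
        run = run + 1 if frame_type == "B" else 0
        longest = max(longest, run)
    return longest


if __name__ == "__main__":
    gop = "IBBPBBPBB"
    print(coding_order(gop))
    print(max_held_b_frames(gop), "raw B frames held at once")
```

With IBBP, two raw B frames wait while the next anchor is coded, and the two anchors they reference also stay in memory. That's the four-frame juggling act described above.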

With the GH1, there were clearly cases where video buffer overflows (rather than SD card write-speed limitations) were the cause of fatal recording errors. I wonder if GH2 is also vulnerable to buffer overflows, and if it needs or can be patched to eliminate this pitfall?

On the GH1, I also spotted macroblock quality reduction in lower parts of certain frames. In addition to running out of bitrate in the middle of the frame, I suspect that running out of encoding time can also force the encoder to resort to shortchanging macroblock optimization. -

@mpgxsvcd

I'll have to think about that. I imagine it could go either way depending on circumstances. P frames can't really improve on I frame quality very much, so I would consider relatively small I frames in conjunction with big P frames problematic. I know that if the P frames were actually bigger than the I frames, I would go with I frames. I think P frames nearly the same size as I frames amount to wasted coding efficiency.

Chris -

@lpowell

I think the prediction process sometimes doesn't have time to try all possibilities, which is not optimal. I know, for example, that it limits the number of QP values it tries in I frames to five, and P and B frames are encoded with a single QP value. Obviously there isn't the CPU bandwidth to try more QP values. I assume it makes other, more dynamic prediction compromises as well.

I looked at the buffering in the GH2 and it looks like it assumes B frames for all modes (even the ones that don't use B frames) - this is good.

Your comment on the GH1 overflow - amen, I couldn't agree more.

Chris -

The "log video" referenced above by cbrandin is a good example of what I occasionally see.

Would love to see that video with GH1 vs GH2. Need to find some logs.... -

@Lpowell

I think I just figured out where the GH1 "rogue" frames came from. AVC codecs provide a couple of parameters intended for decoders: a bitrate and a CPB (coded picture buffer) size. Some decoders use these to configure themselves; some (like StreamParser) don't. There is a bug in the GH2 codec where, if bitrate/1024 goes above 32767, the reported bitrate becomes a huge number. It happens because the code in the codec uses a signed extension instruction to convert the value to 32 bits instead of an unsigned one. Even with that fixed, if the number goes over 65535 you still get a bad value. Most decoders don't rely on these parameters. Technically, however, they are supposed to, and streams with bad values there are not compliant. I think Elecard's StreamEye quite correctly does use these values, and that results in the strange "rogue frame" interpretation.
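Here's a sketch of the two failure modes. The 16-bit field is an assumption inferred from the thresholds above (32767 is the signed 16-bit maximum, 65535 the unsigned one). The function names are hypothetical, and the post doesn't say exactly where or how the GH2 stores the value.

```python
def sign_extend_16(value):
    """Widen the low 16 bits as a signed two's-complement number."""
    value &= 0xFFFF
    return value - 0x10000 if value & 0x8000 else value


def reported_field(bitrate_bps, signed=True):
    """What ends up in a 32-bit register after bitrate/1024 passes through 16 bits.

    signed=True mimics the buggy sign extension; signed=False mimics zero
    extension. The result is shown as an unsigned 32-bit number, the way a
    decoder reading the parameter would see it.
    """
    units = bitrate_bps // 1024
    low16 = units & 0xFFFF  # anything above 65535 is silently truncated
    widened = sign_extend_16(low16) if signed else low16
    return widened & 0xFFFFFFFF


if __name__ == "__main__":
    for bps in (24_000_000, 44_000_000, 80_000_000):
        print(bps, reported_field(bps), reported_field(bps, signed=False))
```

At 24 Mbps both paths agree. At 44 Mbps the value crosses 32767 and sign extension turns it into a number over four billion, while zero extension is still right. At 80 Mbps it passes 65535 and is truncated either way, which matches the "still a bad number" case.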

Chris
