
-

@DrDave The GH2 will not give you any footage outside of 16-235, so it won't have superwhites/superblacks.

Soft clipping, which I think you're talking about with your Adobe training courses, works when you have superwhites you want to pull back down into a 16-235 range without pulling everything down. Same goes for pulling superblacks up. You can soft clip to get a specific look too, even if you don't have superwhites/superblacks. An over- or underexposed shot is just that: you won't have any detail in those areas, just hard clipping.
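To make the difference between soft and hard clipping concrete, here is a minimal Python sketch. The function names, the knee position of 200, and the straight-line compression above the knee are all assumptions chosen for illustration; real grading tools typically use a smoother curve, and this is not how Premiere implements it.

```python
def hard_clip_high(y, out_max=235):
    """Hard clip: everything above out_max collapses to out_max."""
    return min(y, out_max)


def soft_clip_high(y, knee=200, in_max=255, out_max=235):
    """Illustrative soft clip with a linear knee (hypothetical parameters).

    Values at or below the knee pass through untouched; values between
    the knee and in_max are compressed so that in_max lands on out_max.
    """
    if y <= knee:
        return y
    scaled = knee + (y - knee) * (out_max - knee) / (in_max - knee)
    return round(min(scaled, out_max))


# Two different superwhite levels stay distinct after the soft clip,
# but become identical after the hard clip.
for level in (100, 244, 255):
    print(level, hard_clip_high(level), soft_clip_high(level))
```

Midtones such as 100 are left alone in both cases, which is the point of soft clipping: only the region above the knee is pulled down.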

@_gl Yep, in CS5.5 it does have handy icons that show which of its built-in effects are using YUV and float. Frankly some of the Premiere filters just weird me out, like the gamma correction filter. "The default gamma setting is 10. In the effect’s Settings dialog box, you can adjust the gamma from 1 to 28." WTF doesn't even begin to cover that one.

-

@DrDave. Just try to answer my original question, and you'll find your answers. If your '100 IRE' = Y'235, then your sensor output would be R255G255B255 (rec.709). What RGB values will your Canon sensor output to produce something that's well over your '100 IRE' level? Or as @balazer puts it: how will your camera make whiter than white?

@Towi. AFAIK, CMOS chips don't capture Y' levels, they capture RGB levels. That signal is then converted to a Y'CbCr colorspace. There are several ways of converting between color spaces. You say the GH2 does some remapping; do you mean that it first converts the RGB signal to Y'CbCr color space (full swing), and then remaps this to Y'CbCr rec.709 (studio-swing)? I really doubt that, as it seems more logical to do the colorspace conversion directly from RGB to Y'CbCr studio-swing.
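For reference, the direct conversion can be sketched in a few lines of Python using the standard Rec.709 luma coefficients and 8-bit studio-swing scaling (16-235 for Y', 16-240 for Cb/Cr). The function names are made up for this example, and nothing here is known about what the GH2 firmware actually does internally; the second function only shows that, for luma, a full-swing conversion followed by a remap lands on practically the same code values.

```python
KR, KG, KB = 0.2126, 0.7152, 0.0722  # Rec.709 luma coefficients


def rgb_to_ycbcr_studio(r, g, b):
    """8-bit R'G'B' (0-255) -> 8-bit studio-swing Y'CbCr, in one step."""
    rn, gn, bn = r / 255, g / 255, b / 255
    y = KR * rn + KG * gn + KB * bn
    cb = (bn - y) / (2 * (1 - KB))
    cr = (rn - y) / (2 * (1 - KR))
    return (round(16 + 219 * y), round(128 + 224 * cb), round(128 + 224 * cr))


def luma_two_step(r, g, b):
    """Luma only: convert to full swing (0-255) first, then remap to 16-235."""
    y_full = round(KR * r + KG * g + KB * b)
    return round(16 + y_full * 219 / 255)


print(rgb_to_ycbcr_studio(255, 255, 255))  # peak white
print(rgb_to_ycbcr_studio(0, 0, 0))        # black
print(rgb_to_ycbcr_studio(255, 0, 0))      # pure red
```

With this scaling, R255G255B255 maps to Y' 235, so there is no RGB triple that produces a luma above 235; any superwhites would have to come from somewhere other than this conversion.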

What about chroma: has anyone already seen colors in their GH2 footage that are not rec.709 compliant?

-

Adobe's YC scope displays 0-255, and my footage falls between 0-255, but I guess it doesn't really matter whether the parts in the 0-16 and 235-255 ranges, plus the superwhites, are real or imaginary, since I presumably would use the same workflow for exposure: first setting the blacks to zero (which they are), then adjusting the 255 level, then the gamma slider in the middle, all using any effects tool in Color Corrector that does not remap. You can watch the YC scope in real time as you make these adjustments. As to why they show up on the scope if the camera cannot create them, I can't answer that, but I doubt they are artifacts. Soft clipping: in my training course the values are pulled down to 255, not 235, and you can again check the results in real time using YC. Now, the person in the video could be mistaken, of course. But I have had good luck with using the system as it is laid out. IRE: Adobe chooses to map IRE to YC, that's their choice. As soon as they do that, it becomes a digital equivalent.

-

@DrDave Firstly, you don't have to set your blacks to zero, nor your whites to 255. Is every shot you take truly going to give you a peak white or black? Do you really want to stretch your range like that all the time for every shot? Then you reach for the gamma slider; where does this workflow come from? It's the same(ish) thing as what @lpowell said to do. What do you do with a shot that contains no black, or no high whites? Why would you want to take the limited dynamic range of a GH2 and stretch it like that, risking it breaking down? The only time I ever force a black and white point on a shot is when I need to match grade to another shot for integration. And that's lazy of me tbh. Other than that, if you have overexposed and your blacks are too milky, then fixing it with exposure/contrast controls is preferable.

Edit : actually, okay, do it this way. Set a black point, then set a white point. But leave gamma (and the midtones) alone, use lift and gain controls to tweak the black up/down and white up/down respectively instead. Change/fix your midtones thru the usual colour correction methods. Or better yet, just drop or push your gamma and leave your whitepoint, black points alone. Heh, sorry, do it the ways you like, ignore me.

"Soft clipping: in my training course the values are pulled down to 255, not 235", there is no way you are getting values higher than 255 out of a GH2, you've done something else to push them there. Basically, though, you are expanding a studio swing signal into full swing, fair enough. If you are seeing values below 16 or above 235 with GH2 footage imported straight into Premiere, something is going wrong somewhere. Some remapping/reinterpretation, something, is happening. Also, what do you mean by Adobe choosing to map IRE to YC? YC isn't digital either.

-

Okay, to try and illustrate what I'm saying, I've taken a locked-off shot and used Nuke's curve tool to map which points in the frame have the max and min luma values (your white/black points). As you can see, both points move around a fair bit during the length of the shot. Now it's a good example, as the overall min/max values do not change substantially. So, I guess with this shot using just the YC scope would be 'good enough' to use that approach. Other shots, meh, not so much. Shots with really light blacks or dim low whites, definitely not.

However even with this shot you can see that the actual black point (min value) alters a bit between one frame and another. Likewise in the footage there is a situation where the white point (max value) changes too. Now it doesn't seem like much of a change, but remember these figures are in linear float space, in an RGB scale the change is probably going to be a bit more noticeable. Now you can, as you do, set your white point/black point based on your YC scope but don't you really need to identify at what frames in the footage are your luma peaks and lows ? Am I missing something truly fundamental here ? Obviously looking at how the luma min and max jump around doing this with a pixel sampler is definitely going to be dodgy.
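The idea of tracking the luma extremes per frame, rather than eyeballing a scope, can be sketched roughly like this in Python. The function name and the toy frame data are invented for the example; in practice each frame would be the full list of luma samples decoded from the clip.

```python
def luma_extremes(frames):
    """Return per-frame (min, max) luma and the indices of the frames
    holding the overall darkest and brightest samples."""
    per_frame = [(min(f), max(f)) for f in frames]
    darkest = min(range(len(frames)), key=lambda i: per_frame[i][0])
    brightest = max(range(len(frames)), key=lambda i: per_frame[i][1])
    return per_frame, darkest, brightest


# Toy data: three tiny "frames" of luma samples (made-up values).
frames = [
    [20, 100, 230],
    [18, 90, 228],
    [22, 110, 235],
]
per_frame, darkest, brightest = luma_extremes(frames)
print(per_frame)            # min/max wander from frame to frame
print(darkest, brightest)   # frames to use for black and white points
```

Setting the black and white points from the frames this reports is more reliable than picking an arbitrary frame, since the extremes move around over the length of the shot.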

edit : Yeah, it got remapped, my bad, but what I'm saying still stands. Basically you don't want to look at the scope, just set your black point to 16 and your white to 235, and it's mapped right.

luma_values_1.png (1651 x 776, 1M)

luma_values_2.png (1659 x 744, 1023K)

-

"You say the Gh2 does some remapping, do you mean that it first converts the RGB signal to Y'CbCr full-swing color space, and then remaps this to Y'CbCr rec.709 studio-swing compliant color space? I really doubt that, as it seems more logical to do the colorspace conversion directly from RGB to Y'CbCr studio-swing"

I also don't think that the GH2 remaps to a "Y'CbCr rec.709 studio-swing compliant color space". I don't think that a "studio-swing compliant color space" even exists (I'm not quite sure, but it doesn't make sense to me). With "remapping" (0-255 to 16-235) I was just referring to the luminance levels … whether the GH2 remaps in RGB ("sensor mode") or in Y'CbCr (on the processed MTS file) I don't know. I assume the latter ...

-

DrDave said "IRE: Adobe chooses to map IRE to YC, that's their choice. As soon as they do that, it becomes a digital equivalent."

O.k., but how do you map IRE to luminance values? 100 IRE is supposed to be white. Adobe mapped 100 IRE to 255. So they already screwed up, 'cause 255 is not always white, depending on what video standard you're using. Professional cameras almost all put white at 235. IRE doesn't become a digital equivalent just because Adobe mapped luminance values to IRE in some arbitrary way.

There's one very good reason to have a digital waveform monitor labeled in IRE, and that's if you are converting between analog and digital and you want to compare the digital levels to the levels on an analog monitor. But that depends on the digital monitor having the configuration options to show the levels correctly for whatever digital standard and whatever analog standard you are using. Vegas actually does that, with a waveform monitor option to interpret digital levels as either 0-255 or 16-235, and the option to set the black point to 0 IRE or 7.5 IRE. The digital monitor is a simulation of what the analog monitor would show.
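A rough Python sketch of what such a configurable scope has to do is below. The function name, parameter names, and the straight linear mapping are assumptions for illustration; this is not taken from Vegas or any other application.

```python
def luma_to_ire(y, studio_swing=True, setup=0.0):
    """Map an 8-bit Y' code value to an IRE reading on a simulated scope.

    studio_swing: treat black/white as 16/235 (True) or 0/255 (False).
    setup: IRE level assigned to black, e.g. 0.0 or 7.5.
    """
    black, white = (16, 235) if studio_swing else (0, 255)
    fraction = (y - black) / (white - black)
    return setup + fraction * (100.0 - setup)


print(luma_to_ire(235))                      # studio white
print(luma_to_ire(16, setup=7.5))            # studio black with setup
print(luma_to_ire(255, studio_swing=False))  # full-swing white
print(luma_to_ire(255))                      # superwhite on a studio-swing scale
```

The same code value 255 reads as exactly 100 IRE or as roughly 109 IRE depending on which interpretation is selected, which is why a scope with a single fixed mapping can mislead.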

IRE is a scale that shows you what the underlying signal voltage looks like in a way that relates directly to the luminance values that they encode. (as well as a bunch of other things besides luminance values, like sync and porches) We already have the digital equivalent of that, and it's the Y values themselves, on a scale of 0 to 255. IRE is a well-defined scale for voltages in analog video. Mapping digital Y values to IRE in an arbitrary and non-standard way doesn't help anything.

-

@Stray, yes, every shot I take goes from 0-255, that's because I'm using high-intensity tungsten lighting, and have black, non-reflective music stands and white music paper in every shot. So every shot will run black to white. The GH2, unlike the Canon Camcorders, does not have the dynamic range to capture this footage, and that is where the super whites are important.

-

@DrDave ah right, heh, fair enough then. My concern came from the suggestions from the flowmotion thread being so similar to what you described, I got the impression there was some wacky workflow that had become accepted by some.

-

DrDave, so is your camera full swing, or studio swing with superblacks and superwhites? There's a difference. It sounds like full swing to me.

-

I think anyone who wants to can try this: take your copy of Premiere, or download the free trial, set up two 1000 watt lights and video something really black (angled) and something really white (facing), expose three bracketed clips since the histogram overexposes slightly and you lose the blacks (for very good reasons, given the sensor), import into Premiere, uncheck the 7.5 IRE button, set the display level to 100 (to show the transients), turn off the chroma, and take a look. I'm sure, and I mean this, that people who have been doing this way longer than me can spot something I might miss. Of course you have to use a brightly lit scene, so that you have to pull down the exposure to get the whites in; otherwise you won't know if you are videoing the full dynamic range.

-

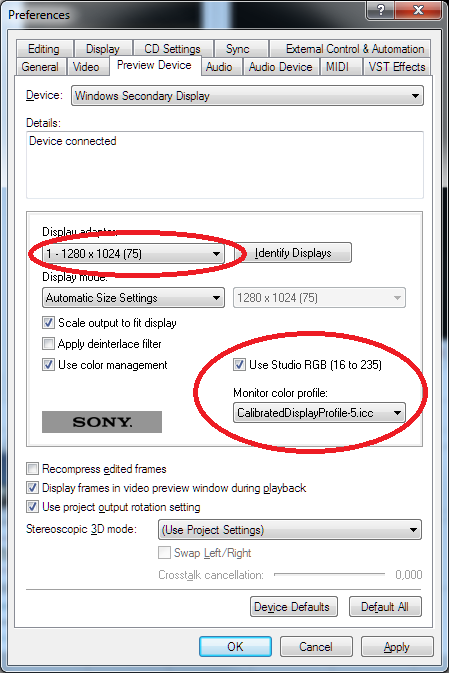

My workflow in Vegas Pro.

The Vibrant profile with saturation on +2 matches the 16-235 range.

Then I add the sRGB (studio RGB) to cRGB (computer RGB) levels plugin, that's all.

Oops, my second monitor was on when taking these screenshots (sorry...)

srgb.png (2640 x 1024, 2M)

crgb.png (2640 x 1024, 2M)

-

@towi Why not assume it is not remapping at all, but just converting from the sensor's RGB values directly to the studio-swing Y'CbCr colorspace?

-

@DrDave Stop looking at a digital/analog interpretation, with all its vagaries, and look instead at your RGB values. See if the footage goes from 0-255 or from 16-235. IRE is not RGB. Are you looking at Canon footage ?

-

As far as it goes with Premiere interpreting GH2 footage I did a quick test with Nuke. I used ffmpeg to move an mts file into a mov container using -vcodec copy (cos Nuke won't read mts files) and also exported a sequence of tif stills from the same mts file using Premiere. The results were identical. Is this a valid test though, can someone with more ffmpeg experience confirm this, or will copying into a mov container alter anything else ?

@mozes alt-prtscr will only grab the active window.

-

@Stray RGB values should be going from 0-255, both in studio- and fullswing. You want to look at Y' values, not RGB.

-

@DirkVoorhoeve The footage itself shouldn't be showing any values under 16 or over 235 though right ? We are discussing the interpretation of the GH2 footage in ITU 709 here still yeah ? Am I missing something here, I've had a weird week. Pressing that +7.5v button is going to give you a 'what if it was broadcast' waveform. Correcting out the milky look as people have described is going to move it into full swing, this is alright to do obviously, but you probably want to convert it back if you do intend to broadcast it. If you only have a computer monitor then moving footage into full swing may be the only way to work.

Basically the YC waveform has little to nothing to do with this discussion really.

-

@mozes The problem with your workflow is that Vegas' internal preview is 0-255. So when you extend 16-235 to 0-255 to get the more washed out look you see that in preview screen. But only SEE. When you encode back to YUV, say to mp4 for youtube, Vegas will simply clip those 235-255 colors. You lose highlight information that way. If you need that washed out look, you'd rather adjust curves or lower gamma.

In brief - Vegas's internal preview is RGB, hence it's not WYSIWYG when it comes to editing YUV video. What you see in the preview window is not what you get in the rendered file. You need an external monitor to see the 16-235 output in Vegas.
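The highlight loss described here can be shown with a small Python sketch. It takes the description above at face value (a 16-235 to 0-255 stretch followed by a hard clip at 235 on export); the function names are invented for the example, and whether a particular encoder clips or rescales depends on the codec and settings.

```python
def studio_to_full(v):
    """Stretch a 16-235 value onto the 0-255 scale."""
    return round((v - 16) * 255 / 219)


def clip_to_studio(v):
    """Hard clip to 16-235, as assumed to happen on export."""
    return max(16, min(235, v))


highlights = [220, 230, 235]                      # distinct levels in the source
stretched = [studio_to_full(v) for v in highlights]
exported = [clip_to_studio(v) for v in stretched]
print(stretched)  # still distinct in the 0-255 preview
print(exported)   # all identical after the clip
```

Three highlight levels that were distinguishable in the original footage, and still look distinct in the 0-255 preview, end up as the same value once clipped, which is the detail that cannot be recovered afterwards.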

-

@zigizigi my preview is also srgb, if yours is different then check the settings.

Naamloos.png (449 x 673, 61K)

-

My understanding is that ffmpeg is 16-235; however, it has been several years since I used it, and they may well have fixed that issue. As I recall, there is a patch for swscale for this issue.

-

"towi Why not assume it is not remapping at all, but just converting from the sensor's RGB values directly to the studio-swing Y'CbCr colorspace?"

Because I don't know of a "studio swing Y'CbCr color space". I only know of "Y'CbCr", which basically includes 256 luminance levels (talking 8bit). IMHO the term "studio swing" only refers to the levels utilized for image content ("broadcast safe colors") and has nothing to do with a "color space" in the first place.

-

@towi Why not assume it is not remapping at all, but just converting from the sensor's RGB values directly to studio-swing Y'CbCr image content?

-

To do so, the camera software has to apply a tone curve.

-

"My understanding is that ffmpeg is 16-235"

ffmpeg also converts to fullswing ... it depends on the target codec used for the conversion. (I assume you can also control it with certain settings. I am not quite sure, though.)

-

@towi which of course it would not have to do when converting to full-swing Y'CbCr?
