
There has been some heated discussion of this topic in another thread, and I think it might be more useful to start a dedicated thread to get to the bottom of the question.

Basically, a lot of people have noticed that the GH2's black and white levels are at 16 and 235 respectively (ITU-R BT.709) rather than at 0 and 255.

I personally use Sony Vegas, and the histogram in Vegas shows my GH2's MTS files to have black levels at 16 and whites at 235. Others have noticed this too in Avid etc.

The discussion has been: is it definitely the case that the GH2 records the levels at 16-235, or is it the different programs that interpret those levels according to their own internal designs?

One important point is the GH2's own histogram. Try shooting with the lens cap on. Before you press record, the histogram shows the levels at zero, but as soon as REC is pressed the histogram level jumps up a few notches (to around 16). In my case, the recorded black footage viewed in Vegas or VLC shows not black but a very dark grey on a calibrated computer monitor (0-255). On a broadcast monitor the footage is black. The waveform in Vegas also shows the levels at around 16, and so does the GH2's own histogram on playback.

(I have tried, but I can never get my levels to go below 16 or above 235 in camera; but depending on which session settings I use in Vegas, the program shows the levels as either 16-235 or 0-255, hence the confusion and the question.)

What are your findings? Is there any way to be certain exactly what levels are being recorded and not what individual software is making of the levels?

-

Yes, the encoded GH2 luminance levels really are 16-235. To analyze this accurately you want to look at the decoded Y-channel, without any conversion to RGB or other conversion. I've performed this analysis several different ways, and they all match. Attached are images showing line-by-line Y-channel histograms, generated with ffdshow into Avisynth, no color conversion. The brown bars are showing 0-15 and 236-255.
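A minimal sketch of the kind of check described above, assuming you already have the decoded 8-bit Y' samples of a frame as plain integers (getting them out of the MTS file, e.g. via ffdshow and Avisynth, is outside this sketch). It only counts how many samples fall in the footroom (0-15), the nominal range (16-235) and the headroom (236-255), i.e. the regions the brown bars highlight. `classify_luma` and the sample values are hypothetical.

```python
from collections import Counter
from typing import Iterable


def classify_luma(samples: Iterable[int]) -> Counter:
    """Count 8-bit Y' samples in the footroom, the nominal range and the headroom."""
    counts = Counter(footroom=0, nominal=0, headroom=0)
    for y in samples:
        if not 0 <= y <= 255:
            raise ValueError(f"not an 8-bit code value: {y}")
        if y < 16:
            counts["footroom"] += 1       # 0-15
        elif y > 235:
            counts["headroom"] += 1       # 236-255
        else:
            counts["nominal"] += 1        # 16-235
    return counts


# Hypothetical Y' samples, including one value on each side of the nominal range.
frame = [16, 16, 17, 16, 15, 235, 236]
print(classify_luma(frame))
```

For real GH2 footage you would expect the footroom and headroom counts to stay at or near zero, apart from the occasional value caused by compression noise.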

How to properly deal with such footage is another question, having everything to do with what software you're using. I don't believe that Vegas's histogram is accurate.

gh2_histogram.jpg (2176 x 1080, 117K)

gh2-histogram_light.jpg (2176 x 1080, 336K)

gh2-histogram_dark.jpg (2176 x 1080, 196K)

-

I concur, the GH2 appears to be recording in the range 16-235. The waveform monitor in Premiere Pro shows this to be the case. And I trust this waveform monitor, because I have footage shot with other cameras that does indeed go above 235, and the monitor clearly shows it. But the GH2 always tops out at 235 (100 IRE).

-

Ralph, IRE is a scale of voltages in analog video. It's meaningless in digital video. Adobe might as well have labeled their scope in inches or pounds.

0 IRE is 0 volts. 100 IRE is the voltage of the white level, for whatever video standard and voltage scale you're using. The black level depends on what video standard you're using.

And even if you knew that Adobe had labeled their waveform monitor accurately, you don't know how they are doing the YUV to RGB conversion. That's key, because different software does different amounts of level expansion when converting to RGB.

-

@balazer Yes, but the results match your Avisynth findings, so I think that's a good vote of confidence for Adobe.

-

I use Adobe Premiere purely as an editor, and that discussion made me look hard at what Premiere actually does in terms of interpreting footage. Yep, I may do some quick CC tweaking in Premiere, but I really don't trust it when it doesn't tell me anything. It doesn't let you interpret footage, it just does it (whatever 'it' may be), and then it doesn't share with you what it's done (which could explain the crap I've had with some DPX files). This bugs me. But my current workflow seems to be working, painful as it is: I export the MTS files to a TIFF sequence, work in Nuke, bring the result into Premiere to edit, and then render out.

With regard to Premiere, what @_gl posted has been the most revealing: http://personal-view.com/talks/discussion/comment/49127#Comment_49127 I guess Adobe's other problem is not to give Premiere too much and thereby undercut possible AE sales. But _gl doesn't know for sure what Premiere does when it imports footage, and nor do I. Back to the point though: I don't think any of us has found an NLE yet that doesn't interpret GH2 footage correctly (16-235); you'd notice it visibly if it didn't. However taking what @_gl has posted, it does mean you will have to check what third party plugins actually 'do' when running in Premiere. But you should be doing that in any NLE, compositor or image-processing thingy, tbh.

@Ralph_B Yeah, I trust Premiere's waveform. I know what you're saying, @balazer, about the possible vagaries of its YUV interpretation, but I think the same approach is taken by every app in that regard. Speaking as an old analog video head, you're better off staring at RGB histograms when you're tweaking your footage, though the waveform is prettier to gaze at.

-

I think much of the confusion comes from an inaccurate understanding of what rec709 (16-235) actually is.

Traditional TVs (the actual monitor/hardware) display black at 16 Y' and white at 235 Y' (8bit nomenclature). So the actual image content is displayed within a range of 16-235 levels.

However, technically, rec709-compliant video always ranges from 0-255: 0-15 Y' provides footroom and 236-255 Y' provides headroom. This is very important to understand: rec709-compliant video contains the full range of levels, but only the range from 16-235 is used for actual image content.

The standards aside, the footroom and headroom can of course also be used for image content if you choose to do so. The only issue here is that levels below 16 Y' and above 235 Y' can not be displayed on a traditional TV monitor. But of course they can be displayed on a computer monitor displaying 0-255 levels.

I am under the impression that a lot of people think a certain "rec709 16-235" setting in a certain piece of software necessarily means that levels below 16 and above 235 get cut off, or that the image is remapped from 0-255 to 16-235 or similar. This is not always the case - it depends on the software and on the software manufacturer's nomenclature (and this is in fact a real mess, as the term "rec709" is used to describe very different software behavior). Software that truly supports a consistent rec709 workflow will never cut off the footroom (0-15) and headroom (236-255), as these levels are by definition part of the rec709 standard (for instance, footroom can be used for super-black keys). Such software can of course work within the full range of luminance levels (so 0-255).

I am working on Avid (have been working on Avid for years and have gone through all the mess with changing standards, outdated, updated and new codecs, computer monitors, confusing graphic card drivers … the whole shit) … this is why I mostly refer to Avid and will illustrate the rec709 issue on Avid.

Let's address the "16-235 image content levels within a 0-255 range" thing …

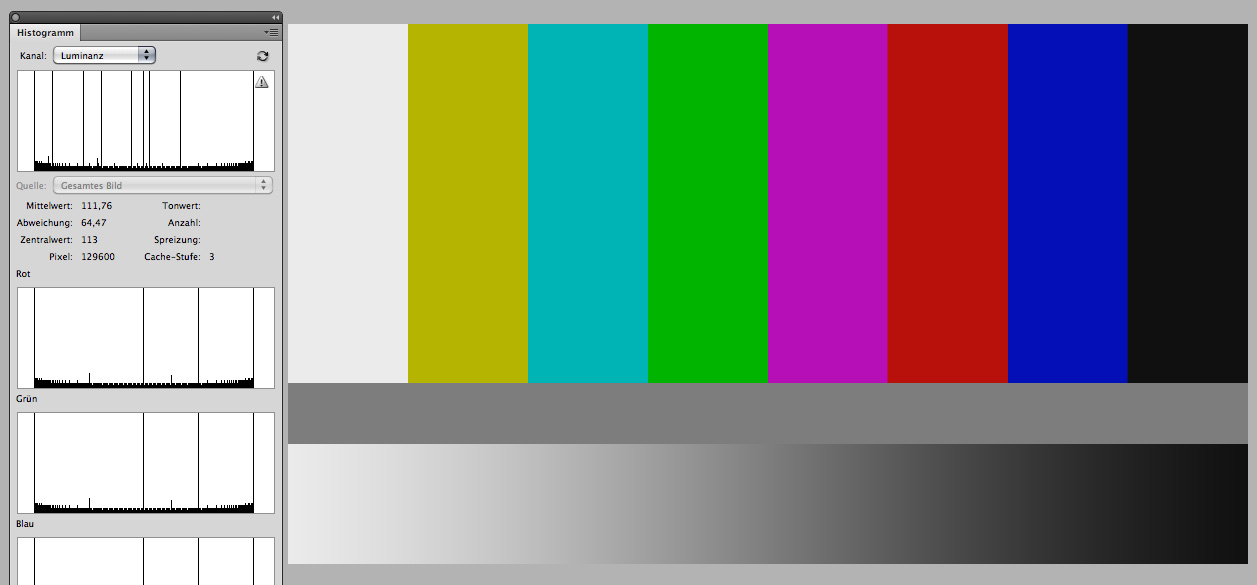

Here's a traditional color bars image, opened in Photoshop. The histogram shows that the image content ranges from 16-235:

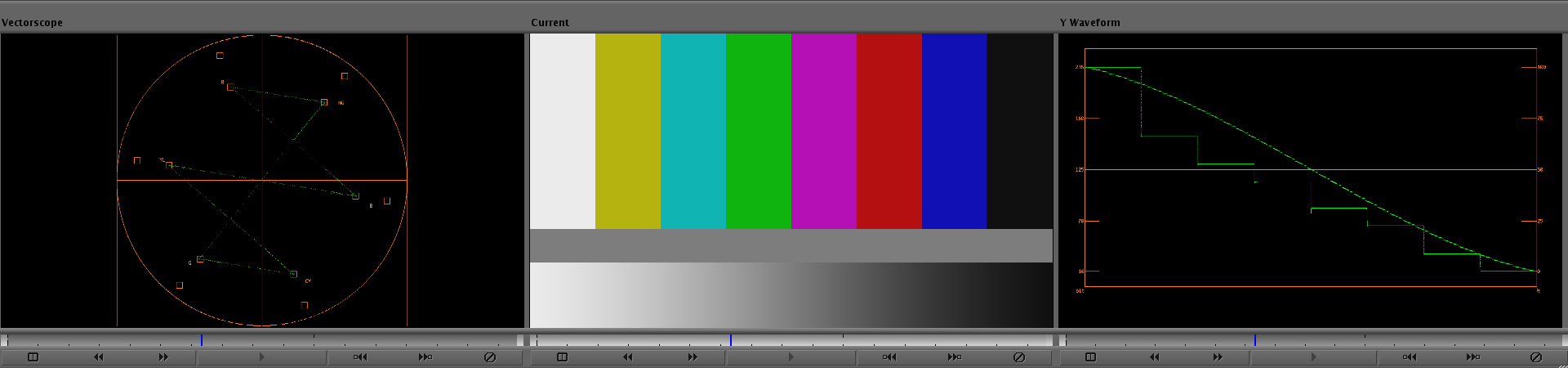

Here's the same image imported into Avid (import setting "rec709"… more on this below). On the left you can see the vectorscope showing 100% accurate colors. On the right side you can see the Y waveform showing the luminance range of the image (16-235). Please note that the Y waveform is designed to display 0-255 levels, but in this case the actual image only contains "legal" levels (so 16-235):

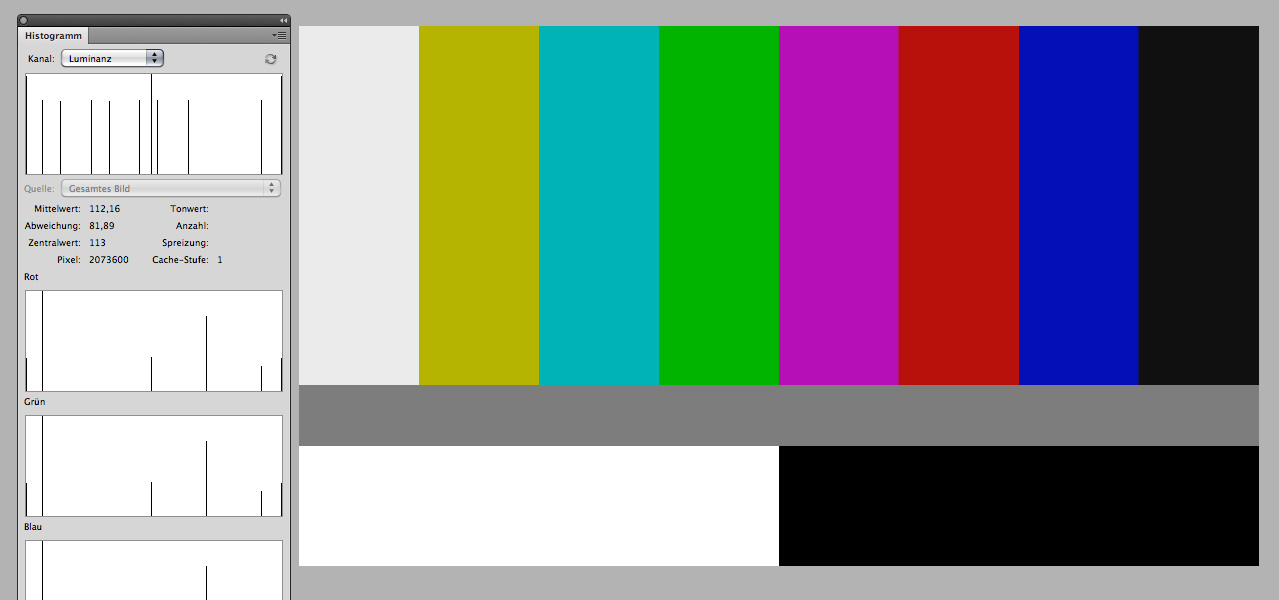

I've modified the color bars. I've added a rectangle with pure black (RGB 0|0|0) and a rectangle with pure white (RGB 255|255|255). This is how the image looks in Photoshop. The histogram shows the full range of luminance levels (0-255):

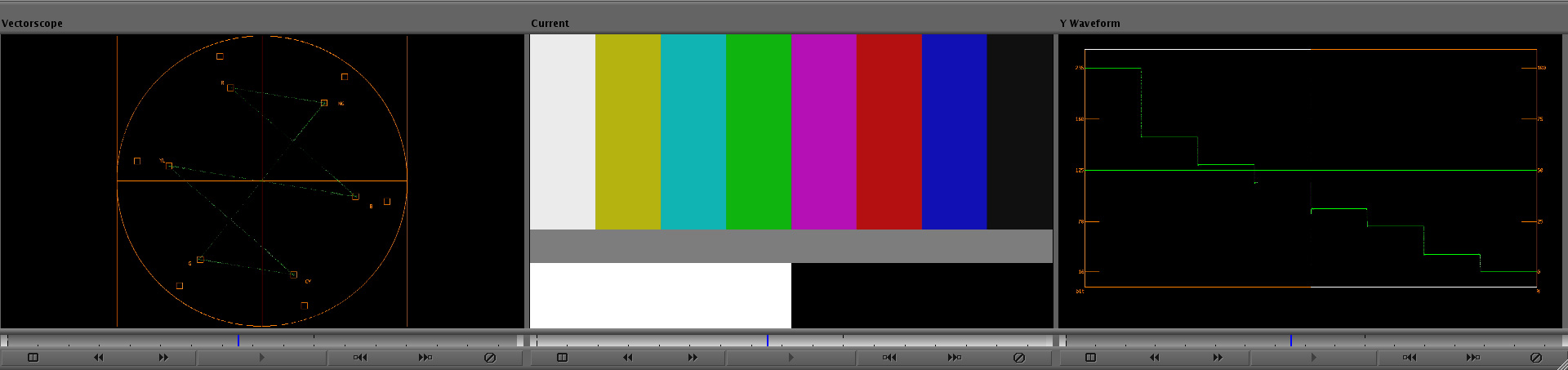

Here's the same image imported into Avid (import setting "rec709"). It is the very same image - only black and white have been added. So we should consider this image as being "fullswing", as it utilizes the full range of luminance levels, correct? So please note: although the source image is "fullswing", I've imported it with said "rec709" import setting.

This is how it looks in Avid. The vectorscope shows 100% accurate colors and the Y waveform shows the correct values for the TV-black bar on the right (16 Y') and the TV-white bar on the left (235 Y'). The super-black shows the correct value at 0 Y' (white line furthest down on the right) and the super-white at 255 Y' (white line at the top on the left):

Full stop. I know this is not easy to understand, so let me recapitulate: I've imported a "fullswing" image with "rec709" import settings and the image displayed in Avid shows the exact same levels as the histogram of the same image displayed in Photoshop. In short: the original levels of the image have been preserved.

Well, of course the full range of levels has been preserved, as Avid has to make sure that I don't lose the "footroom" and "headroom" (rec709 nomenclature) of the image. Avid does not care whether I use the footroom and headroom for image content - it simply makes sure that footroom and headroom are preserved. This is why in Avid's nomenclature "rec709" means "no remapping" / "preserve levels". And this is, by the way, also why Avid enables a color-consistent workflow with "fullswing" footage (if you consider the rec709 footroom and headroom levels as image content, it's easy to understand).

Avid also provides an import setting "RGB (0-255)". This (and only this) setting will apply remapping - it will squeeze fullswing levels into studio swing levels. So this setting is aimed at remapping 0-255 image content into the range of legal broadcast levels. You can use it for instance to compress a photo into legal broadcast levels on import.
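For illustration, the squeeze that an "RGB (0-255)" style import performs can be sketched as a linear mapping of full-swing codes onto 16-235, assuming simple rounding (Avid's exact rounding and any dithering are not documented in this thread). By contrast, a "preserve levels" import like the "rec709" setting described above would leave the code values untouched. `full_to_studio` is a hypothetical name.

```python
def full_to_studio(code: int) -> int:
    """Linearly squeeze a full-swing 8-bit level (0-255) into studio swing (16-235)."""
    if not 0 <= code <= 255:
        raise ValueError(f"not an 8-bit code value: {code}")
    # 0 -> 16, 255 -> 235; 256 input codes share 220 output codes, so some merge.
    return round(16 + code * 219 / 255)


for full in (0, 128, 255):
    print(full, "->", full_to_studio(full))
```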

Now, back to the GH2. I have yet to see a GH2 clip where the GH2's RGB histo (in playback mode) does not match Avid's RGB histo of the same file (MTS imported into Avid without remapping). Actually I don't need Avid (or any software) to verify the levels of the GH2's video … the GH2's histos alone say it all (IMO). Of course you could say the GH2 does not know how to display the levels of its own video. However, the fact that Avid "reads" the same levels as the GH2 (and some other software I use also shows the very same levels all the time) encourages me to believe that the GH2 displays the correct levels on its histo. (Which makes sense anyway… photo cameras do a lot of silly things… but they are all able to display the correct levels of the captures they've produced themselves.) The GH2 remaps to 16-235 in video mode: http://www.personal-view.com/talks/discussion/comment/47583#Comment_47583

colbars.jpg (1257 x 585, 57K)

colbars_rec709_wf_vs.jpg (1920 x 450, 107K)

colbars_mod.jpg (1279 x 600, 56K)

colbars_mod_rec709_wf_vs.jpg (1920 x 453, 99K)

-

I agree with everything that towi said. But to make one point more clear:

If your NLE software maps the GH2's YUV color values straight across to RGB values with no level expansion (0-255 to 0-255), and then shows you that RGB, you are seeing the video displayed inaccurately. The camera's black will be grey on your screen, and the camera's white will be less than white.

If you have such an NLE and you want to do any color correction, you'll want to first make sure you are seeing the GH2's levels displayed accurately by correcting in your NLE, in the display adapter, or in the display.

But if you're not doing color correction, you can probably ignore this whole problem. If the NLE writes files out using conversion that's the inverse of the conversion it uses when reading the files in, everything will be correct when you go to play the output with a player that uses levels correctly.

The NLE is doing right by your video in (mostly) preserving the range of levels, but doing wrong by it by not displaying it correctly. Players are supposed to map the levels correctly, and the good ones do. In my opinion it's a real flaw in many of the NLEs that they have no switch to say "interpret this video as rec.709" and then do the appropriate YUV to RGB conversion with level expansion for displaying the video. The benefit of preserving the full range in the NLE would become moot if the NLE interpreted the footage correctly in the first place, since levels outside of 16-235 will be clipped by a proper player & display combo.
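A luma-only sketch of the two display paths described above, assuming 8-bit code values, gray pixels (chroma ignored) and the common linear expansion (Y' - 16) * 255 / 219 with clipping. Real players and NLEs also handle chroma and may round or dither differently; the function names are hypothetical.

```python
def naive_display(y: int) -> int:
    """Straight-across mapping: the Y' code is used directly as the RGB gray level."""
    return y


def expanded_display(y: int) -> int:
    """Studio-swing expansion: 16 -> 0, 235 -> 255; anything outside is clipped."""
    value = round((y - 16) * 255 / 219)
    return max(0, min(255, value))


for y in (16, 126, 235, 250):
    print(f"Y'={y:3d}  naive RGB={naive_display(y):3d}  expanded RGB={expanded_display(y):3d}")
```

With the straight-across path the camera's black (16) is shown as dark grey and its white (235) as light grey; with expansion they become 0 and 255, and anything above 235 is clipped, as a proper player and display would do.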

Incidentally, here is ffdshow's YUV to RGB conversion settings screen. These options cover pretty much the whole gamut for handling different video inputs correctly. If you want to use this to play GH2 videos with accurate levels, first disable all of the YUV output modes in ffdshow, and then set the RGB conversion settings as shown here.

ffdshow_rgb.png (569 x 473, 21K)

-

"The camera's black will be grey on your screen, and the camera's white will be less than white"

on your computer screen! On your TV (or broadcast compliant calibrated) screen it will look correct "out of the box".

-

I only have a computer screen. :)

-

Agree with everything said here. Well put! @Stray brings up a damn good point "it does mean you will have to check what third party plugins actually 'do'"

It's no doubt smart to check your waveform monitor when enabling plugins.

@Towi > "On your TV (or broadcast compliant calibrated) screen it will look correct "out of the box"."

Yep, that's pretty much it. Studio standard: 16-235

-

Balazer, > "I don't believe that Vegas's histogram is accurate."

Sony Vegas Pro has always been accurate for me.

vegas1.JPG (436 x 605, 57K)

vegas2.JPG (391 x 561, 37K)

-

Rambo, thanks for the Vegas tip. But I've found the preview color management setting only works when you full-screen the preview. (not as helpful if you only have one monitor connected)

-

My Canon covers the full range plus superwhites, so I will shoot the same scene with the GH2 and compare them.

Setting up the scope in Premiere: uncheck the 7.5 legacy box, uncheck chroma, set the 50 percent slider to 100 to pick up the faint detail, then you have 0-255. My GH2 and GH13 both display the full range from zero to the superwhites.

-

@Siddho maybe update your information about Vegas display with the screenshots Rambo made, for other readers.

So, to check that I understand correctly: when recording, the GH2 converts the RGB information from the sensor to a Rec.709-compliant Y'CbCr signal (black, R=0 G=0 B=0, is converted to Y'=16 Cb=128 Cr=128). On playback, you want your screen to display this Y'=16 Cb=128 Cr=128 as black, i.e. R=0 G=0 B=0, and you need to set this up correctly in your player / editor / screen. Does that sum it up?

@DrDave How could your Canon cover superwhites? If the Y'=235 level corresponds to R=255 G=255 B=255 from your sensor (full exposure), what RGB values would you need to get Y' up to 255?
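For reference, a sketch of the textbook BT.709 conversion from 8-bit full-range R'G'B' to 8-bit studio-swing Y'CbCr, assuming gamma-encoded input and simple rounding. The GH2's actual in-camera processing isn't documented in this thread, so this only illustrates the standard mapping. It reproduces the black point quoted above, and because every channel tops out at 255, the largest Y' it can produce is 235, which is the crux of the superwhite question.

```python
from typing import Tuple

KR, KB = 0.2126, 0.0722      # BT.709 luma coefficients for red and blue
KG = 1.0 - KR - KB           # green coefficient (0.7152)


def rgb_to_ycbcr_709(r: int, g: int, b: int) -> Tuple[int, int, int]:
    """Convert full-range 8-bit R'G'B' to 8-bit studio-swing BT.709 Y'CbCr."""
    rn, gn, bn = r / 255, g / 255, b / 255
    y = KR * rn + KG * gn + KB * bn      # 0.0 .. 1.0
    pb = (bn - y) / (2 * (1 - KB))       # -0.5 .. 0.5
    pr = (rn - y) / (2 * (1 - KR))       # -0.5 .. 0.5
    return round(16 + 219 * y), round(128 + 224 * pb), round(128 + 224 * pr)


print(rgb_to_ycbcr_709(0, 0, 0))        # (16, 128, 128)
print(rgb_to_ycbcr_709(255, 255, 255))  # (235, 128, 128)
```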

-

balazer > @Rambo, thanks for the Vegas tip. But I've found the preview color management setting only works when you full-screen the preview. (not as helpful if you only have one monitor connected)

What serious editor doesn't have a second monitor for full-screen preview, and a calibrated one at that? My eyes need BIG these days :-) (the screen caps were from my laptop, not my main system)

-

However taking what @_gl has posted, it does mean you will have to check what third party plugins actually 'do' when running in Premiere.

Actually it's not just 3rd party plugins - not all of the in-built effects support YUV or FLOAT either. That also depends on your Premiere version, newer versions are adding more support. In recent versions YUV and FLOAT capable plugins are labelled as such (you can search for them too I think - I'm vague because I haven't used any built-in effects for a while, I do everything with my own).

EDIT: I forgot to mention that FLOAT pixel formats are normally only used if you tick the 'Maximum Bitdepth' box, e.g. in Sequence Settings and when you're exporting. Again I'm vague, as I haven't used float much lately (I tend to stick to 8-bit YUV as float is slower), and again the exact behaviour may depend on your Premiere version.
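Why the pixel format can matter, as a generic sketch (not Premiere's actual code path, which isn't documented in this thread): if an effect expands studio-swing Y' into an 8-bit RGB buffer, anything below 16 or above 235 has nowhere to go and is clipped, whereas a float buffer can hold values below 0.0 and above 1.0 and hand them back intact. The function names are hypothetical.

```python
def round_trip_float(y: int) -> int:
    """Studio-swing Y' -> normalized float gray -> Y'. Values outside 0.0-1.0 survive."""
    v = (y - 16) / 219          # 16 -> 0.0, 235 -> 1.0, may go below 0 or above 1
    return round(16 + 219 * v)


def round_trip_8bit(y: int) -> int:
    """Studio-swing Y' -> 8-bit full-range gray (clipped to 0-255) -> Y'."""
    c = round((y - 16) * 255 / 219)
    c = max(0, min(255, c))     # an 8-bit buffer cannot hold anything outside 0-255
    return round(16 + c * 219 / 255)


for y in (8, 128, 245):
    print(f"Y'={y:3d}  float path -> {round_trip_float(y):3d}  8-bit path -> {round_trip_8bit(y):3d}")
```

Within 16-235 both paths return the original code; outside it, only the float path does.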

-

@DirkVoorhoeve by superwhites I mean well over 100 IRE

-

@DrDave If your '100 IRE' is Y'=235, then your sensor output would be R=255 G=255 B=255 (rec.709). What RGB values would your Canon sensor output to produce something that's well over your '100 IRE' level?

-

I'm assuming the cam records the full range 0-255, since that's what I see on the scope, and I'm assuming that the superwhites are what I've been told all along: detail that can be brought down into the visible range in post.

-

I will say it again for those who weren't paying attention... IRE is a scale of voltages in analog video. It is completely meaningless for digital video.

How much sense would it make to describe the luminance of some digital video as 0.5 V? Of course it doesn't make any sense.

Some NLEs have waveform monitors labeled in IRE. But these are merely a simulation of what an analog waveform monitor would show. And that simulation is only accurate if a number of import, project and/or monitor settings are all made correctly. And even then, interpretation of IRE values would need to be made with respect to some analog video standard. Different analog video standards map luminance values to voltages differently.

If you want to talk IRE, you must qualify it by saying which software package you are using, which monitor screen you're looking at, and how your import, project, and monitor settings are set.
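To illustrate why an IRE reading depends on those settings, here is a hypothetical sketch of how a software scope might simulate IRE from 8-bit Y'. The three parameters (which code counts as black, which as white, and whether NTSC-style 7.5 IRE setup is applied) stand in for the import, project and monitor choices mentioned above; no particular NLE's formula is implied.

```python
def y_to_simulated_ire(y: int, black_code: int = 16, white_code: int = 235,
                       setup_ire: float = 0.0) -> float:
    """Map an 8-bit Y' code to the IRE value a simulated analog scope might show.

    black_code / white_code: digital codes the scope treats as black and white.
    setup_ire: analog black level, e.g. 7.5 for NTSC with setup, 0.0 without.
    """
    fraction = (y - black_code) / (white_code - black_code)
    return setup_ire + fraction * (100.0 - setup_ire)


# The same two code values read differently under three hypothetical scope settings.
settings = {
    "studio, no setup": {},
    "studio, 7.5 setup": {"setup_ire": 7.5},
    "full-range scale": {"black_code": 0, "white_code": 255},
}
for label, kwargs in settings.items():
    low = y_to_simulated_ire(16, **kwargs)
    high = y_to_simulated_ire(235, **kwargs)
    print(f"{label:18s} Y'=16 -> {low:6.2f} IRE, Y'=235 -> {high:6.2f} IRE")
```

The same GH2 white (Y'=235) reads as 100 IRE on a studio-swing scale but only about 92 IRE on a scale that puts 100 IRE at 255.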

Otherwise, for digital video, if you want to talk about what is encoded in the video, the only things that make sense to talk about are the unencoded YCbCr or RGB values. And since the GH2 encodes YCbCr values, if you want to talk RGB, you must say how the YCbCr values were converted to RGB. There are lots of different ways of converting.

-

Superwhites are whites whiter than white. The GH2 does not generate superwhites, except perhaps for spurious superwhites just slightly above white due to compression noise or signal processing noise.

The Canon camera, I believe, does not generate superwhites either. The Canon just happens to define its white point at Y=255, compared to Y=235 for the GH2.

-

"The Canon camera, I believe, does not generate superwhites either. The Canon just happens to define its white point at Y=255, compared to Y=235 for the GH2"

I have no Canon camera or Canon files at hand right now... but if I remember correctly Canon video files are fullswing.*

Regarding hybrid cameras, I think talking about generating certain levels is not really accurate. I think photo cameras always capture the full range of luminance (0-255, just like in photo mode). The question is how the sensor's full-range signal is encoded into the video file in camera. The GH2 remaps its MTS files to 16-235 in camera (which I assume is the reason for the GH2's banding issue). Canon cameras do not remap... they pass the full range of luminance into their video files. So, if you like: Canon video files contain super blacks and super whites (= levels above 235 Y' and below 16 Y').

*edit: just found some 5D2 video files... yes, they are fullswing

-

But towi, that's my point: if a camera uses full swing, black is zero and white is 255, so there is nothing blacker than black and nothing whiter than white. Those cameras don't have superblacks and superwhites. Superblacks and superwhites can only exist when the camera and the video standard allow for something blacker than black and whiter than white. Rec.709 allows for them. Analog video standards with setup allow for them. But the GH2 doesn't generate them.

If you incorrectly interpret full-swing video as studio swing, sure, you just got yourself some superblacks and superwhites. You also just got yourself some incorrectly interpreted video.
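A short sketch of that last point, assuming 8-bit luma codes: full-swing black and white land exactly on 0.0 and 1.0 when read as full swing, but fall outside the nominal 0-1 range (i.e. turn into apparent superblacks and superwhites) when misread as studio swing. `normalized_level` is a hypothetical helper.

```python
def normalized_level(code: int, studio_swing: bool) -> float:
    """Interpret an 8-bit luma code as a fraction of nominal black (0.0) to white (1.0)."""
    if studio_swing:
        return (code - 16) / 219
    return code / 255


# Full-swing black and white, read correctly and then misread as studio swing.
for code in (0, 255):
    as_full = normalized_level(code, studio_swing=False)
    as_studio = normalized_level(code, studio_swing=True)
    print(f"code {code:3d}: as full swing {as_full:+.3f}, as studio swing {as_studio:+.3f}")
```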

-

"But towi, that's my point: if a camera uses full swing, black is zero and white is 255, so there is nothing blacker than black and nothing whiter than white. Those cameras don't have superblacks and superwhites"

ah, okay - I've got it! Yes, that's correct, of course.

-

@balazer The Premiere scope is set to IRE100=255. They had to set it to something. Now, Adobe could be wrong, but all my video maps out exactly as it should. As for the superwhites, since they have visible detail in them, I doubt that they are artifacts. However, if you know for sure that camcorders as well as the GH1 and the GH2 do not in fact use superwhites, I would love to see a source for that. I just assumed it was pretty standard these days, and there is a whole chapter on how to restore overexposed footage using superwhites in my Adobe training courses.
