It allows PV to keep going, with more focus on AI, while remaining one of the few truly independent places.


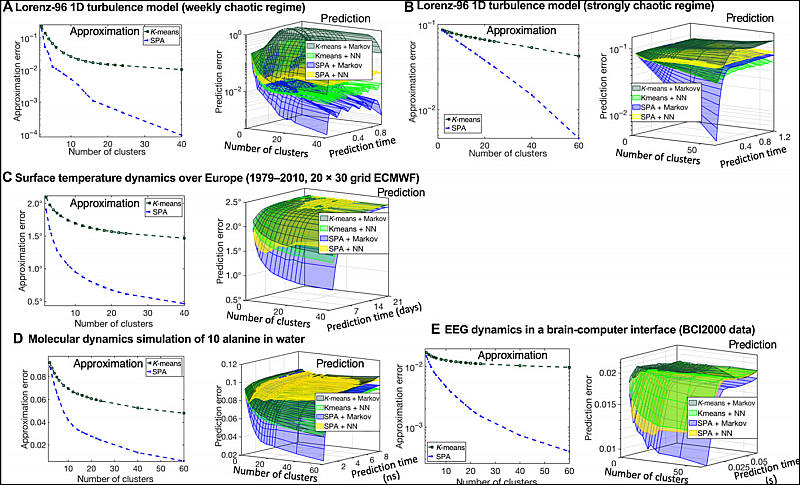

Computational costs become a limiting factor when dealing with big systems. The exponential growth in hardware performance observed over the past 60 years (Moore’s law) is expected to end in the early 2020s. More advanced machine learning approaches (e.g., NNs) exhibit cost scaling that grows polynomially with the dimension and the size of the statistics, rendering some form of ad hoc preprocessing and prereduction with simpler approaches (e.g., clustering methods) unavoidable for big data situations. However, these ad hoc preprocessing steps might impose a strong bias that is not easy to quantify. At the same time, a lower cost of the method typically goes hand in hand with a lower quality of the obtained data representations (see Fig. 1). Since the amounts of collected data in most of the natural sciences are expected to continue their exponential growth in the near future, pressure on computational performance (quality) and scaling (cost) of algorithms will increase.

Instead of solving discretization, feature selection, and prediction problems separately, the introduced computational procedure (SPA) solves them simultaneously. The iteration complexity of SPA scales linearly with data size. The amount of communication between processors in the parallel implementation is independent of the data size and linear in the data dimension (Fig. 2), making it appropriate for big data applications. Hence, SPA did not require any form of data prereduction for any of the considered applications. As shown in Fig. 1, while having essentially the same iteration cost scaling as the very popular and computationally very cheap K-means algorithm (16, 17), SPA achieves substantially higher approximation quality and a much higher parallel speedup with the growing size T of the data.
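For anyone wondering what "the same iteration cost scaling as K-means" means in practice, here is a minimal NumPy sketch of a single K-means (Lloyd) iteration. This is not SPA (the excerpt does not give its implementation); it only illustrates the baseline the authors compare against. The function names `kmeans_iteration` and `kmeans` and all parameters are made up for illustration.

```python
import numpy as np

def kmeans_iteration(X, centers):
    """One Lloyd (K-means) iteration on data X of shape (T, D).

    Cost is O(T * K * D): every point is compared with every center once,
    so the work grows linearly with the number of data points T.
    """
    # Squared distances between all points and all centers, shape (T, K).
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    # Assignment step: index of the nearest center for each point.
    labels = d2.argmin(axis=1)
    # Update step: move each center to the mean of its assigned points.
    new_centers = centers.copy()
    for k in range(centers.shape[0]):
        members = X[labels == k]
        if len(members) > 0:  # leave an empty cluster's center unchanged
            new_centers[k] = members.mean(axis=0)
    return labels, new_centers

def kmeans(X, k, n_iter=20, seed=0):
    """Run a fixed number of Lloyd iterations from a random initialization."""
    rng = np.random.default_rng(seed)
    # Illustrative initialization: pick k distinct data points as centers.
    centers = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        labels, centers = kmeans_iteration(X, centers)
    return labels, centers
```

Doubling T doubles the size of the distance matrix and therefore the work per iteration, which is the linear scaling the quoted text refers to. The claim in the excerpt is that SPA keeps this scaling while giving a better approximation.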

